+

+## Acknowledgements

+

+1. We borrowed a lot of code from [FunASR](https://github.com/modelscope/FunASR).

+2. We borrowed a lot of code from [FunCodec](https://github.com/modelscope/FunCodec).

+3. We borrowed a lot of code from [Matcha-TTS](https://github.com/shivammehta25/Matcha-TTS).

+4. We borrowed a lot of code from [AcademiCodec](https://github.com/yangdongchao/AcademiCodec).

+5. We borrowed a lot of code from [WeNet](https://github.com/wenet-e2e/wenet).

+

+## Disclaimer

+The content provided above is for academic purposes only and is intended to demonstrate technical capabilities. Some examples are sourced from the internet. If any content infringes on your rights, please contact us to request its removal.

diff --git a/cosyvoice/__init__.py b/cosyvoice/__init__.py

new file mode 100644

index 0000000000000000000000000000000000000000..e69de29bb2d1d6434b8b29ae775ad8c2e48c5391

diff --git a/cosyvoice/__pycache__/__init__.cpython-310.pyc b/cosyvoice/__pycache__/__init__.cpython-310.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..e0c5148c2b4dafe98d3a6be683f4192d577b9f4d

Binary files /dev/null and b/cosyvoice/__pycache__/__init__.cpython-310.pyc differ

diff --git a/cosyvoice/__pycache__/__init__.cpython-38.pyc b/cosyvoice/__pycache__/__init__.cpython-38.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..66096c4ee88c66fb7a5180d0334398712d1d88eb

Binary files /dev/null and b/cosyvoice/__pycache__/__init__.cpython-38.pyc differ

diff --git a/cosyvoice/bin/average_model.py b/cosyvoice/bin/average_model.py

new file mode 100644

index 0000000000000000000000000000000000000000..d095dcd99f915f0ffdbc3a0c14fcb6f8db900be0

--- /dev/null

+++ b/cosyvoice/bin/average_model.py

@@ -0,0 +1,92 @@

+# Copyright (c) 2020 Mobvoi Inc (Di Wu)

+# Copyright (c) 2024 Alibaba Inc (authors: Xiang Lyu)

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+

+import os

+import argparse

+import glob

+

+import yaml

+import torch

+

+

+def get_args():
+    parser = argparse.ArgumentParser(description='average model')
+    parser.add_argument('--dst_model', required=True, help='averaged model')
+    parser.add_argument('--src_path',
+                        required=True,
+                        help='src model path for average')
+    parser.add_argument('--val_best',
+                        action="store_true",
+                        help='average the checkpoints with the lowest validation loss')
+    parser.add_argument('--num',
+                        default=5,
+                        type=int,
+                        help='number of checkpoints to average')
+
+    args = parser.parse_args()
+    print(args)
+    return args
+
+
+def main():
+    args = get_args()
+    val_scores = []
+    if args.val_best:
+        yamls = glob.glob('{}/*.yaml'.format(args.src_path))
+        yamls = [
+            f for f in yamls
+            if not (os.path.basename(f).startswith('train')
+                    or os.path.basename(f).startswith('init'))
+        ]
+        for y in yamls:
+            with open(y, 'r') as f:
+                dic_yaml = yaml.load(f, Loader=yaml.BaseLoader)
+                loss = float(dic_yaml['loss_dict']['loss'])
+                epoch = int(dic_yaml['epoch'])
+                step = int(dic_yaml['step'])
+                tag = dic_yaml['tag']
+                val_scores += [[epoch, step, loss, tag]]
+        sorted_val_scores = sorted(val_scores,
+                                   key=lambda x: x[2],
+                                   reverse=False)
+        print("best val (epoch, step, loss, tag) = " +
+              str(sorted_val_scores[:args.num]))
+        path_list = [
+            args.src_path + '/epoch_{}_whole.pt'.format(score[0])
+            for score in sorted_val_scores[:args.num]
+        ]
+    else:
+        # only validation-based selection is implemented; without this branch
+        # path_list would be undefined below and the script would crash
+        raise SystemExit('--val_best is required: checkpoints are selected by validation loss')
+    print(path_list)
+    avg = {}
+    num = args.num
+    assert num == len(path_list), \
+        'expected {} checkpoints, found {}'.format(num, len(path_list))
+    for path in path_list:
+        print('Processing {}'.format(path))
+        states = torch.load(path, map_location=torch.device('cpu'))
+        for k in states.keys():
+            if k not in avg.keys():
+                avg[k] = states[k].clone()
+            else:
+                avg[k] += states[k]
+    # average
+    for k in avg.keys():
+        if avg[k] is not None:
+            # PyTorch 1.6+: use true_divide instead of /=
+            avg[k] = torch.true_divide(avg[k], num)
+    print('Saving to {}'.format(args.dst_model))
+    torch.save(avg, args.dst_model)
+
+
+if __name__ == '__main__':
+    main()
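With `--val_best`, `average_model.py` ranks the per-epoch YAML records by validation loss, keeps the `--num` lowest, and takes an element-wise mean of the matching checkpoints. A dependency-free sketch of those two steps follows, assuming plain Python lists stand in for `torch` tensors; `select_best` and `average_states` are hypothetical helper names, not functions in this repository.

```python
from typing import Dict, List, Tuple

# (epoch, step, validation loss, tag), the fields average_model.py reads
# from each per-epoch YAML; the values used below are illustrative only.
Record = Tuple[int, int, float, str]


def select_best(records: List[Record], num: int) -> List[Record]:
    """Return the `num` records with the lowest validation loss."""
    if len(records) < num:
        raise ValueError(f"need {num} checkpoints, found {len(records)}")
    return sorted(records, key=lambda r: r[2])[:num]


def average_states(states: List[Dict[str, List[float]]]) -> Dict[str, List[float]]:
    """Element-wise mean of parameter dicts (lists stand in for tensors)."""
    avg: Dict[str, List[float]] = {}
    for state in states:
        for name, values in state.items():
            if name not in avg:
                avg[name] = list(values)  # copy, like tensor.clone()
            else:
                avg[name] = [a + v for a, v in zip(avg[name], values)]
    n = len(states)
    # divide the running sum once at the end
    return {name: [a / n for a in values] for name, values in avg.items()}


if __name__ == "__main__":
    records = [(0, 100, 2.5, "CV"), (1, 200, 1.9, "CV"), (2, 300, 2.1, "CV")]
    print([r[0] for r in select_best(records, num=2)])  # [1, 2]
    print(average_states([{"w": [1.0, 2.0]}, {"w": [3.0, 4.0]}]))  # {'w': [2.0, 3.0]}
```

Keeping a running sum and dividing once mirrors the script's `avg[k] += states[k]` followed by `torch.true_divide(avg[k], num)`.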

diff --git a/cosyvoice/bin/export_jit.py b/cosyvoice/bin/export_jit.py

new file mode 100644

index 0000000000000000000000000000000000000000..ddd486e97117f2086075ba90726e09edf195b7f3

--- /dev/null

+++ b/cosyvoice/bin/export_jit.py

@@ -0,0 +1,91 @@

+# Copyright (c) 2024 Alibaba Inc (authors: Xiang Lyu)

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+

+from __future__ import print_function

+

+import argparse

+import logging

+logging.getLogger('matplotlib').setLevel(logging.WARNING)

+import os

+import sys

+import torch

+ROOT_DIR = os.path.dirname(os.path.abspath(__file__))

+sys.path.append('{}/../..'.format(ROOT_DIR))

+sys.path.append('{}/../../third_party/Matcha-TTS'.format(ROOT_DIR))

+from cosyvoice.cli.cosyvoice import CosyVoice, CosyVoice2

+

+

+def get_args():
+    parser = argparse.ArgumentParser(description='export your model for deployment')
+    parser.add_argument('--model_dir',
+                        type=str,
+                        default='pretrained_models/CosyVoice-300M',
+                        help='local path')
+    args = parser.parse_args()
+    print(args)
+    return args
+
+
+def get_optimized_script(model, preserved_attrs=None):
+    # script -> freeze -> optimize_for_inference; freezing keeps only forward
+    # unless extra methods (e.g. forward_chunk) are listed in preserved_attrs
+    script = torch.jit.script(model)
+    if preserved_attrs:
+        script = torch.jit.freeze(script, preserved_attrs=preserved_attrs)
+    else:
+        script = torch.jit.freeze(script)
+    script = torch.jit.optimize_for_inference(script)
+    return script
+
+
+def main():
+    args = get_args()
+    logging.basicConfig(level=logging.DEBUG,
+                        format='%(asctime)s %(levelname)s %(message)s')
+
+    torch._C._jit_set_fusion_strategy([('STATIC', 1)])
+    torch._C._jit_set_profiling_mode(False)
+    torch._C._jit_set_profiling_executor(False)
+
+    try:
+        model = CosyVoice(args.model_dir)
+    except Exception:
+        try:
+            model = CosyVoice2(args.model_dir)
+        except Exception as e:
+            raise TypeError('no valid model_type!') from e
+
+    if not isinstance(model, CosyVoice2):
+        # 1. export llm text_encoder
+        llm_text_encoder = model.model.llm.text_encoder
+        script = get_optimized_script(llm_text_encoder)
+        script.save('{}/llm.text_encoder.fp32.zip'.format(args.model_dir))
+        script = get_optimized_script(llm_text_encoder.half())
+        script.save('{}/llm.text_encoder.fp16.zip'.format(args.model_dir))
+
+        # 2. export llm llm
+        llm_llm = model.model.llm.llm
+        script = get_optimized_script(llm_llm, ['forward_chunk'])
+        script.save('{}/llm.llm.fp32.zip'.format(args.model_dir))
+        script = get_optimized_script(llm_llm.half(), ['forward_chunk'])
+        script.save('{}/llm.llm.fp16.zip'.format(args.model_dir))
+
+        # 3. export flow encoder
+        flow_encoder = model.model.flow.encoder
+        script = get_optimized_script(flow_encoder)
+        script.save('{}/flow.encoder.fp32.zip'.format(args.model_dir))
+        script = get_optimized_script(flow_encoder.half())
+        script.save('{}/flow.encoder.fp16.zip'.format(args.model_dir))
+
+
+if __name__ == '__main__':
+    main()
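Both `export_jit.py` and `export_onnx.py` detect the model type by trying `CosyVoice(model_dir)` first and falling back to `CosyVoice2(model_dir)`. The same fallback can be written as a loop over candidate loaders. This is a sketch under the assumption, shared with the scripts, that any exception means "wrong model type"; `load_first` is a hypothetical helper, and the dummy loaders only stand in for the two real constructors.

```python
from typing import Callable, Optional, Sequence


def load_first(loaders: Sequence[Callable[[str], object]], model_dir: str) -> object:
    """Return the result of the first loader that succeeds on model_dir."""
    last_err: Optional[Exception] = None
    for loader in loaders:
        try:
            return loader(model_dir)
        except Exception as err:  # broad on purpose, like the export scripts
            last_err = err
    # every loader failed: raise the same error type the scripts raise,
    # with the last failure attached as __cause__
    raise TypeError('no valid model_type!') from last_err


if __name__ == '__main__':
    def as_v1(path):  # stand-in for CosyVoice(path) rejecting the directory
        raise ValueError('not a v1 model directory')

    def as_v2(path):  # stand-in for CosyVoice2(path) succeeding
        return ('CosyVoice2', path)

    print(load_first([as_v1, as_v2], 'some/model_dir'))  # ('CosyVoice2', 'some/model_dir')
```

Supporting another model class then means appending one loader, and `raise ... from` makes the link to the last failure explicit in the traceback.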

diff --git a/cosyvoice/bin/export_onnx.py b/cosyvoice/bin/export_onnx.py

new file mode 100644

index 0000000000000000000000000000000000000000..9ddd358949d56ebbcded651114e84789e2b908ef

--- /dev/null

+++ b/cosyvoice/bin/export_onnx.py

@@ -0,0 +1,116 @@

+# Copyright (c) 2024 Antgroup Inc (authors: Zhoubofan, hexisyztem@icloud.com)

+# Copyright (c) 2024 Alibaba Inc (authors: Xiang Lyu)

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+

+from __future__ import print_function

+

+import argparse

+import logging

+logging.getLogger('matplotlib').setLevel(logging.WARNING)

+import os

+import sys

+import onnxruntime

+import random

+import torch

+from tqdm import tqdm

+ROOT_DIR = os.path.dirname(os.path.abspath(__file__))

+sys.path.append('{}/../..'.format(ROOT_DIR))

+sys.path.append('{}/../../third_party/Matcha-TTS'.format(ROOT_DIR))

+from cosyvoice.cli.cosyvoice import CosyVoice, CosyVoice2

+

+

+def get_dummy_input(batch_size, seq_len, out_channels, device):
+    x = torch.rand((batch_size, out_channels, seq_len), dtype=torch.float32, device=device)
+    mask = torch.ones((batch_size, 1, seq_len), dtype=torch.float32, device=device)
+    mu = torch.rand((batch_size, out_channels, seq_len), dtype=torch.float32, device=device)
+    t = torch.rand((batch_size,), dtype=torch.float32, device=device)
+    spks = torch.rand((batch_size, out_channels), dtype=torch.float32, device=device)
+    cond = torch.rand((batch_size, out_channels, seq_len), dtype=torch.float32, device=device)
+    return x, mask, mu, t, spks, cond
+
+
+def get_args():
+    parser = argparse.ArgumentParser(description='export your model for deployment')
+    parser.add_argument('--model_dir',
+                        type=str,
+                        default='pretrained_models/CosyVoice-300M',
+                        help='local path')
+    args = parser.parse_args()
+    print(args)
+    return args
+
+
+def main():
+    args = get_args()
+    logging.basicConfig(level=logging.DEBUG,
+                        format='%(asctime)s %(levelname)s %(message)s')
+
+    try:
+        model = CosyVoice(args.model_dir)
+    except Exception:
+        try:
+            model = CosyVoice2(args.model_dir)
+        except Exception as e:
+            raise TypeError('no valid model_type!') from e
+
+    # 1. export flow decoder estimator
+    estimator = model.model.flow.decoder.estimator
+
+    device = model.model.device
+    batch_size, seq_len = 2, 256
+    out_channels = model.model.flow.decoder.estimator.out_channels
+    x, mask, mu, t, spks, cond = get_dummy_input(batch_size, seq_len, out_channels, device)
+    torch.onnx.export(
+        estimator,
+        (x, mask, mu, t, spks, cond),
+        '{}/flow.decoder.estimator.fp32.onnx'.format(args.model_dir),
+        export_params=True,
+        opset_version=18,
+        do_constant_folding=True,
+        input_names=['x', 'mask', 'mu', 't', 'spks', 'cond'],
+        output_names=['estimator_out'],
+        dynamic_axes={
+            'x': {2: 'seq_len'},
+            'mask': {2: 'seq_len'},
+            'mu': {2: 'seq_len'},
+            'cond': {2: 'seq_len'},
+            'estimator_out': {2: 'seq_len'},
+        }
+    )
+
+    # 2. test computation consistency
+    option = onnxruntime.SessionOptions()
+    option.graph_optimization_level = onnxruntime.GraphOptimizationLevel.ORT_ENABLE_ALL
+    option.intra_op_num_threads = 1
+    providers = ['CUDAExecutionProvider' if torch.cuda.is_available() else 'CPUExecutionProvider']
+    estimator_onnx = onnxruntime.InferenceSession('{}/flow.decoder.estimator.fp32.onnx'.format(args.model_dir),
+                                                  sess_options=option, providers=providers)
+
+    for _ in tqdm(range(10)):
+        # random lengths exercise the dynamic seq_len axis declared above
+        x, mask, mu, t, spks, cond = get_dummy_input(batch_size, random.randint(16, 512), out_channels, device)
+        with torch.no_grad():
+            output_pytorch = estimator(x, mask, mu, t, spks, cond)
+        ort_inputs = {
+            'x': x.cpu().numpy(),
+            'mask': mask.cpu().numpy(),
+            'mu': mu.cpu().numpy(),
+            't': t.cpu().numpy(),
+            'spks': spks.cpu().numpy(),
+            'cond': cond.cpu().numpy()
+        }
+        output_onnx = estimator_onnx.run(None, ort_inputs)[0]
+        # assert_allclose is deprecated; assert_close applies the same rtol/atol rule
+        torch.testing.assert_close(output_pytorch, torch.from_numpy(output_onnx).to(device), rtol=1e-2, atol=1e-4)
+
+
+if __name__ == "__main__":
+    main()
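The consistency check at the end of `export_onnx.py` treats the PyTorch output as `actual` and the ONNX Runtime output as `expected`, and passes only if every element satisfies `|actual - expected| <= atol + rtol * |expected|`, the element-wise rule documented for `torch.testing.assert_close`. With `rtol=1e-2, atol=1e-4`, the relative term dominates for values of order 1 (roughly 1% slack), while `atol` only matters when `|expected|` is below about 0.01. The stdlib sketch below reproduces that rule for reasoning about tolerances; `max_excess` and `within_tolerance` are hypothetical names, and NaN/inf handling is deliberately left out.

```python
import math
from typing import Sequence


def max_excess(actual: Sequence[float], expected: Sequence[float],
               rtol: float = 1e-2, atol: float = 1e-4) -> float:
    """Largest value of |a - e| - (atol + rtol * |e|) over all element pairs.

    A result <= 0 means every element is within tolerance.
    """
    if len(actual) != len(expected):
        raise ValueError('shape mismatch: {} vs {}'.format(len(actual), len(expected)))
    worst = -math.inf
    for a, e in zip(actual, expected):
        worst = max(worst, abs(a - e) - (atol + rtol * abs(e)))
    return worst


def within_tolerance(actual: Sequence[float], expected: Sequence[float],
                     rtol: float = 1e-2, atol: float = 1e-4) -> bool:
    return max_excess(actual, expected, rtol, atol) <= 0.0


if __name__ == '__main__':
    # allowed error at |expected| = 1.0 is 1e-4 + 1e-2 * 1.0 = 0.0101
    print(within_tolerance([1.005], [1.0]))  # True  (error 0.005)
    print(within_tolerance([1.05], [1.0]))   # False (error 0.05)
```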

diff --git a/cosyvoice/bin/export_trt.sh b/cosyvoice/bin/export_trt.sh

new file mode 100644

index 0000000000000000000000000000000000000000..808d02a6e927fe00da51c56f2f4c9f8ccc7b4ba5

--- /dev/null

+++ b/cosyvoice/bin/export_trt.sh

@@ -0,0 +1,10 @@

+#!/bin/bash

+# Copyright 2024 Alibaba Inc. All Rights Reserved.

+# download tensorrt from https://developer.nvidia.com/tensorrt/download/10x, check your system and cuda for compatibility
+# for example, for linux + cuda12.4, you can download https://developer.nvidia.com/downloads/compute/machine-learning/tensorrt/10.0.1/tars/TensorRT-10.0.1.6.Linux.x86_64-gnu.cuda-12.4.tar.gz

+TRT_DIR=

+


+ """)

+

+ with gr.Column(scale=1, elem_classes="output-section"):

+ gr.Markdown("### Output")

+ tts_base_output = gr.Audio(

+ type="filepath",

+ label="Generated speech",

+ elem_id="tts_output_audio",

+ interactive=False

+ )

+ tts_base_button = gr.Button(

+ "🚀 Generate speech",

+ variant="primary",

+ elem_classes="btn-generate"

+ )

+ gr.Examples(

+ examples=[

+ ["大家好,欢迎使用Marco-Voice语音合成系统,这是一个强大的语音生成工具。", "徐志胜"],

+ ["科技改变生活,创新引领未来。人工智能正在深刻改变我们的世界。", "李雪琴"],

+ ["在这个充满机遇的时代,我们要勇于探索,敢于创新,不断突破自我。", "范志毅"]

+ ],

+ inputs=[tts_text_v1, speaker_v1],

+ label="Example texts"

+ )

+

+ # Tab 2: Multilingual synthesis
+ with gr.TabItem("🌍 Multilingual Synthesis", id=1):

+ with gr.Row():

+ with gr.Column(scale=2, elem_classes="input-section"):

+ gr.Markdown("### Input settings")

+ tts_text_sft = gr.Textbox(

+ lines=3,

+ placeholder="Enter the text to synthesize...",
+ label="Text to synthesize",

+ value="Hello, welcome to Marco-Voice text-to-speech system. This is a powerful multilingual TTS tool."

+ )

+

+ speaker_sft = gr.Dropdown(

+ choices=["中文男", "中文女", "英文男", "英文女", "韩语女", "日语男"],

+ value="英文男",

+ label="Speaker",
+ info="Choose language and gender"

+ )

+

+ gr.Markdown("""

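+ Model notes: this model supports multiple languages and produces natural speech without any reference audio.
+Tip: for best results, write the input text in the same language as the selected speaker.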

+

+

+ """)

+

+ with gr.Column(scale=1, elem_classes="output-section"):

+ gr.Markdown("### Output")

+ tts_sft_output = gr.Audio(

+ type="numpy",

+ label="Generated speech",

+ interactive=False

+ )

+ tts_sft_button = gr.Button(

+ "🚀 Generate speech",

+ variant="primary",

+ elem_classes="btn-generate"

+ )

+ gr.Examples(

+ examples=[

+ ["Hello, welcome to Marco-Voice text-to-speech system.", "英文男"],

+ ["こんにちは、Marco-Voiceテキスト読み上げシステムへようこそ。", "日语男"],

+ ["안녕하세요, Marco-Voice 텍스트 음성 변환 시스템에 오신 것을 환영합니다.", "韩语女"]

+ ],

+ inputs=[tts_text_sft, speaker_sft],

+ label="Multilingual examples"

+ )

+

+ # Tab 3: Emotion control
+ with gr.TabItem("😄 Emotion Control", id=2):

+ with gr.Row():

+ with gr.Column(scale=2, elem_classes="input-section"):

+ gr.Markdown("### Input settings")

+ tts_text_v3 = gr.Textbox(

+ lines=3,

+ placeholder="Enter the text to synthesize...",
+ label="Text to synthesize",

+ value="这真是太令人兴奋了!我们刚刚完成了一个重大突破!"

+ )

+

+ with gr.Row():

+ with gr.Column():

+ speed_v3 = gr.Slider(

+ minimum=0.5,

+ maximum=2.0,

+ value=1.0,

+ step=0.1,

+ label="Speed"

+ )

+ with gr.Column():

+ emotion_v3 = gr.Radio(

+ choices=["Angry", "Happy", "Surprise", "Sad"],

+ value="Happy",

+ label="Emotion"

+ )

+

+ with gr.Row():

+ with gr.Column():

+ speaker_v3 = gr.Dropdown(

+ choices=names,

+ value="徐志胜",

+ label="Preset voice"

+ )

+ with gr.Column():

+ gr.Markdown("### Or use a custom voice")
+ with gr.Accordion("Upload reference audio", open=False, elem_classes="accordion"):
+ gr.Markdown("Upload 3-10 seconds of clear speech as the reference audio")

+ ref_audio_v3 = gr.Audio(

+ type="filepath",

+ label="Reference audio",

+ elem_classes="audio-upload"

+ )

+ ref_text_v3 = gr.Textbox(

+ lines=2,

+ placeholder="Transcript of the reference audio...",
+ label="Reference text"

+ )

+

+ gr.Markdown("""

+ Model notes: this model uses zero-shot voice cloning and can imitate a target voice from only 3-10 seconds of reference audio.

+

+

+ """)

+

+ with gr.Column(scale=1, elem_classes="output-section"):

+ gr.Markdown("### Output")

+ tts_v3_output = gr.Audio(

+ type="filepath",

+ label="Generated speech",

+ interactive=False

+ )

+ tts_v3_button = gr.Button(

+ "🚀 Generate speech",

+ variant="primary",

+ elem_classes="btn-generate"

+ )

+ gr.Examples(

+ examples=[

+ ["这真是太令人兴奋了!我们刚刚完成了一个重大突破!", "Happy", "徐志胜"],

+ ["我简直不敢相信!这怎么可能发生?", "Surprise", "李雪琴"],

+ ["这太让人失望了,我们所有的努力都白费了。", "Sad", "范志毅"]

+ ],

+ inputs=[tts_text_v3, emotion_v3, speaker_v3],

+ label="Emotion examples"

+ )

+

+ # Footer

+ gr.Markdown("""

+

+ """)

+

+ # Bind events (inputs: tts_text, speed, speaker, ref_audio, ref_text)

+ tts_base_button.click(

+ fn=generate_speech_base,

+ inputs=[tts_text_v1, speed_v1, speaker_v1, ref_audio_v1, ref_text_v1],

+ outputs=tts_base_output

+ )

+

+ tts_sft_button.click(

+ fn=generate_speech_sft,

+ inputs=[tts_text_sft, speaker_sft],

+ outputs=tts_sft_output

+ )

+ # inputs: tts_text, speed, speaker, emotion, ref_audio, ref_text

+ tts_v3_button.click(

+ fn=generate_speech_speakerminus,

+ inputs=[tts_text_v3, speed_v3, speaker_v3, emotion_v3, ref_audio_v3, ref_text_v3],

+ outputs=tts_v3_output

+ )

+

+if __name__ == "__main__":

+ demo.launch(

+ server_name="0.0.0.0",

+ server_port=10163,

+ share=True,

+ favicon_path="/mnt/by079416/fengping/CosyVoice2/logo.png"

+ )

\ No newline at end of file
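Each `.click(...)` above hands the current values of its `inputs` components to `fn` as positional arguments, in list order, so the list has to line up with the callback's parameters: `tts_v3_button` passes six values and `tts_sft_button` two. A small stdlib check that fails fast at startup is sketched below; `check_binding` is a hypothetical helper, not part of Gradio's API, and it only counts positional parameters.

```python
import inspect
from typing import Callable, Sequence


def check_binding(fn: Callable, inputs: Sequence[object]) -> None:
    """Raise TypeError if fn cannot take len(inputs) positional arguments."""
    params = list(inspect.signature(fn).parameters.values())
    if any(p.kind is inspect.Parameter.VAR_POSITIONAL for p in params):
        return  # *args accepts any count
    positional = [p for p in params
                  if p.kind in (inspect.Parameter.POSITIONAL_ONLY,
                                inspect.Parameter.POSITIONAL_OR_KEYWORD)]
    required = [p for p in positional if p.default is inspect.Parameter.empty]
    if not len(required) <= len(inputs) <= len(positional):
        raise TypeError('{} expects {}-{} positional args, binding supplies {}'.format(
            getattr(fn, '__name__', fn), len(required), len(positional), len(inputs)))


if __name__ == '__main__':
    # hypothetical callback with the six-parameter shape used for the emotion tab
    def generate(tts_text, speed, speaker, emotion, ref_audio, ref_text):
        return None

    check_binding(generate, ['text', 1.0, 'spk', 'Happy', None, ''])  # passes
    try:
        check_binding(generate, ['text', 1.0, 'spk', None, ''])  # five values
    except TypeError as err:
        print(err)
```

For example, `generate_speech_base` in `gradio_test.py` takes six parameters (including `emotion`), so binding it with a five-element list like the one used for `tts_base_button` would be caught by this check.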

diff --git a/gradio_test.py b/gradio_test.py

new file mode 100644

index 0000000000000000000000000000000000000000..5651f21989969ec2993525248565f666f268cad0

--- /dev/null

+++ b/gradio_test.py

@@ -0,0 +1,249 @@

+import gradio as gr
+import os
+import random
+import subprocess
+import sys
+import tempfile
+
+import numpy as np
+import soundfile as sf
+import torch
+from pydub import AudioSegment
+
+# put Matcha-TTS on sys.path before importing the CosyVoice-based modules, which may depend on it;
+# os.system('export PYTHONPATH=...') only affects a throwaway subshell and has no effect here
+sys.path.append('third_party/Matcha-TTS')
+
+from cosyvoice.utils.file_utils import load_wav
+from tts_model.base_model.cosyvoice import CosyVoice as CosyVoiceTTS_base
+from tts_model.speaker_minus.cosyvoice import CosyVoice as CosyVoiceTTS_speakerminus
+# from tts_model.model_cosy2_instruct import CosyVoiceTTS as CosyVoiceTTS_cosy2
+
+# AudioSegment.converter = "/mnt/by079416/fengping/ffmpeg-7.0.2-amd64-static/ffmpeg"
+# AudioSegment.ffprobe = "/mnt/by079416/fengping/ffmpeg-7.0.2-amd64-static/ffprobe"
+
+# PATH entries must be directories: add the folder that contains the ffmpeg binary
+ffmpeg_path = "/mnt/by079416/fengping/ffmpeg-7.0.2-amd64-static"
+os.environ["PATH"] += os.pathsep + ffmpeg_path

+

+tts_base = CosyVoiceTTS_base(model_dir="./pretrained_models/CosyVoice-300M/")

+tts_speakerminus = CosyVoiceTTS_speakerminus(model_dir="./pretrained_models/CosyVoice-300M-speakerminus/")

+# tts_cosy2_instruct = CosyVoiceTTS_cosy2(model_path="./pretrained_models/CosyVoice-300M-Instruct_cosy2/")

+

+text_prompt = {

+"翟佳宁": "这个节目就是把四个男嘉宾,四个女嘉宾放一个大别墅里让他们朝夕相处一整个月,月末选择心动的彼此。",

+"范志毅": "没这个能力知道吗,我已经说了,你像这样的比赛本身就没有打好基础。",

+"呼兰": "发完之后那个工作人员说,老师,呼兰老师你还要再加个标签儿,我说加什么标签儿,他说你就加一个呼兰太好笑了。",

+"江梓浩": "就是很多我们这帮演员一整年也就上这么一个脱口秀类型的节目。",

+"李雪琴": "我就劝他,我说你呀,你没事儿也放松放松,你那身体都亮红灯儿了你还不注意。",

+"刘旸": "比如这三年我在街上开车,会在开车的时候进行一些哲思,我有天开车的时候路过一个地方。",

+"唐香玉": "大家好我叫唐香玉, 我年前把我的工作辞了,成了一个全职脱口秀演员。",

+"小鹿": "然后我就老家的亲戚太多了,我也记不清谁该叫谁,所以我妈带着我和我。",

+"于祥宇": "我大学专业学的是哲学,然后节目组就说那这期主题你可以刚好聊一下哲学专业毕业之后的就业方向。",

+"赵晓卉": "终于没有人问我为什么不辞职了,结果谈到现在,谈恋爱第一天人家问我,能打个电话吗?我说你有啥事儿。",

+"徐志胜": "最舒服的一个方式,这个舞台也不一定就是说是来第一年就好嘛,只要你坚持,肯定会有发光发热的那天嘛。"

+}

+audio_prompt = {

+ "翟佳宁": "zhaijianing",

+ "范志毅": "fanzhiyi",

+ "呼兰": "hulan",

+ "江梓浩": "jiangzhihao",

+ "李雪琴": "lixueqin",

+ "刘旸": "liuchang",

+ "唐香玉": "tangxiangyu",

+ "小鹿": "xiaolu",

+ "于祥宇": "yuxiangyu",

+ "赵晓卉": "zhaoxiaohui",

+ "徐志胜": "xuzhisheng"

+}

+audio_prompt_path = "/mnt/by079416/fengping/CosyVoice2/talk_show_prompt/"

+

+def load_audio_and_convert_to_16bit(file_path, target_sample_rate=16000):

+ audio = AudioSegment.from_file(file_path)

+

+ if audio.channels > 1:

+ audio = audio.set_channels(1)

+

+ if audio.frame_rate != target_sample_rate:

+ audio = audio.set_frame_rate(target_sample_rate)

+

+ audio_data = np.array(audio.get_array_of_samples(), dtype=np.float32)

+

+ peak = np.max(np.abs(audio_data))

+ audio_data = audio_data / peak if peak > 0 else audio_data  # peak-normalize, leaving silent input unchanged

+

+ audio_data = (audio_data * 32767).astype(np.int16)

+

+ return torch.tensor(audio_data), target_sample_rate

+

+def convert_audio_with_sox(input_file, output_file, target_sample_rate=16000):

+ try:

+ command = [

+ 'sox', input_file,

+ '-r', str(target_sample_rate),

+ '-b', '16',

+ '-c', '1',

+ output_file

+ ]

+

+ subprocess.run(command, check=True)

+ print(f"Audio converted successfully: {output_file}")

+ except subprocess.CalledProcessError as e:

+ print(f"Error during conversion: {e}")

+

+def generate_speech_base(tts_text, speed, speaker, emotion, ref_audio, ref_text):

+ if not ref_audio and not ref_text:

+ ref_text = text_prompt.get(speaker, "")

+ ref_audio = os.path.join(audio_prompt_path, f"{audio_prompt.get(speaker)}.wav")

+ else:

+ random_int = random.randint(0, 90)

+ soxsed_ref_audio = f"/tmp/{random_int}_ref.wav"

+ convert_audio_with_sox(ref_audio, soxsed_ref_audio)

+ ref_audio = soxsed_ref_audio  # load the 16 kHz, mono, 16-bit copy produced by sox

+ ref_audio = load_wav(ref_audio, 16000)

+ # ref_audio, target_sample_rate = load_audio_and_convert_to_16bit(ref_audio)

+ sample_rate, full_audio = tts_base.inference_zero_shot(

+ tts_text,

+ prompt_text = ref_text,

+ prompt_speech_16k = ref_audio,

+ speed=speed,

+ # speaker=speaker,

+ # emotion=emotion,

+

+ )

+ print("sample_rate:", sample_rate, "full_audio:", full_audio.min(), full_audio.max())

+ with tempfile.NamedTemporaryFile(delete=False, suffix=".wav") as temp_audio_file:

+ output_audio_path = temp_audio_file.name

+ audio_segment = AudioSegment(

+ full_audio.tobytes(),

+ frame_rate=sample_rate,

+ sample_width=full_audio.dtype.itemsize,

+ channels=1

+ )

+ audio_segment.export(output_audio_path, format="wav")

+ print(f"Audio saved to {output_audio_path}")

+

+ return output_audio_path

+

+def generate_speech_speakerminus(tts_text, speed, speaker, key, ref_audio, ref_text):

+ if not ref_audio and not ref_text:

+ ref_text = text_prompt.get(speaker, "")

+ ref_audio = os.path.join(audio_prompt_path, f"{audio_prompt.get(speaker)}.wav")

+ else:

+ random_int = random.randint(0, 90)

+ soxsed_ref_audio = f"/tmp/{random_int}_ref.wav"

+ convert_audio_with_sox(ref_audio, soxsed_ref_audio)

+ ref_audio = soxsed_ref_audio  # load the 16 kHz, mono, 16-bit copy produced by sox

+ # print("output_file:", output_file)

+ # ref_audio, target_sample_rate = load_audio_and_convert_to_16bit(ref_audio)

+ ref_audio = load_wav(ref_audio, 16000)

+ # if key == "Surprise":

+ # emotion_info = torch.load("/mnt/by079416/surprise.pt")

+ # if key == "Sad":

+ # emotion_info = torch.load("/mnt/by079416/sad.pt")

+ # if key == "Angry":

+ # emotion_info = torch.load("/mnt/by079416/angry.pt")

+ # if key == "Happy":

+ # emotion_info = torch.load("/mnt/by079416/happy.pt")

+

+ emotion_info = torch.load("/mnt/by079416/fengping/CosyVoice2/embedding_info.pt")["0002"][key]

+ sample_rate, full_audio = tts_speakerminus.inference_zero_shot(

+ tts_text,

+ prompt_text = ref_text,

+ # speaker=speaker,

+ prompt_speech_16k = ref_audio,

+ key = key,

+ emotion_speakerminus=emotion_info,

+ # ref_audio = ref_audio,

+ speed=speed

+

+ )

+ print("sample_rate:", sample_rate, "full_audio:", full_audio.min(), full_audio.max())

+ with tempfile.NamedTemporaryFile(delete=False, suffix=".wav") as temp_audio_file:

+ output_audio_path = temp_audio_file.name

+ audio_segment = AudioSegment(

+ full_audio.tobytes(),

+ frame_rate=sample_rate,

+ sample_width=full_audio.dtype.itemsize,

+ channels=1

+ )

+ audio_segment.export(output_audio_path, format="wav")

+ print(f"Audio saved to {output_audio_path}")

+ return output_audio_path

+

+names = [

+ "于祥宇",

+ "刘旸",

+ "呼兰",

+ "唐香玉",

+ "小鹿",

+ "李雪琴",

+ "江梓浩",

+ "翟佳宁",

+ "范志毅",

+ "赵晓卉",

+ "徐志胜"

+]

+

+with gr.Blocks() as demo:

+ gr.Markdown("base model and instruct model")

+ # base

+ with gr.Tab("TTS-v1"):

+ gr.Markdown("## base model testing ##")

+ tts_base_inputs = [

+ gr.Textbox(lines=2, placeholder="Enter text here...", label="Input Text"),

+ gr.Slider(minimum=0.5, maximum=2.0, value=1.0, step=0.1, label="speed", info="Adjust speech rate (0.5x to 2.0x)"),

+ gr.Radio(choices=names, value="徐志胜", label="Available speakers", info="Select the speaker voice"),

+ gr.Radio(choices=["peace"], value="peace", label="Emotion", info="Select the emotion style"),

+ gr.Audio(type="filepath", label="Input audio"),

+ gr.Textbox(lines=2, placeholder="Enter the transcript of the input audio here...", label="Transcript of input audio")

+ ]

+ tts_base_output = gr.Audio(type="filepath", label="Generated speech")

+ tts_base_button = gr.Button("Generate speech")

+ tts_base_button.click(

+ fn=generate_speech_base,

+ inputs=tts_base_inputs,

+ outputs=tts_base_output

+ )

+

+ # with gr.Tab("TTS-v2"):

+ # gr.Markdown("## base model testing ##")

+ # tts_base_inputs = [

+ # gr.Textbox(lines=2, placeholder="Enter text here...", label="Input Text"),

+ # gr.Slider(minimum=0.5, maximum=2.0, value=1.0, step=0.1, label="speed", info="Adjust speech rate (0.5x to 2.0x)"),

+ # gr.Radio(choices=names, value="徐志胜", label="Speaker", info="Select the speaker voice"),

+ # gr.Radio(choices=["peace", "excited", "mixed"], value="peace", label="Emotion", info="Select the emotion style"),

+ # gr.Textbox(lines=2, placeholder="Enter your instruct text here...", label="Input Text"),

+ # gr.Audio(type="filepath", label="Input audio"),

+ # gr.Textbox(lines=2, placeholder="Enter the transcript of the input audio here...", label="Transcript of input audio")

+ # ]

+ # tts_base_output = gr.Audio(type="filepath", label="生成的语音")

+ # tts_base_button = gr.Button("生成语音")

+ # tts_base_button.click(

+ # fn=generate_speech_cosy2_instruct,

+ # inputs=tts_base_inputs,

+ # outputs=tts_base_output

+ # )

+

+ # # instruct

+

+ # def generate_speech_speakerminus(tts_text, speed, speaker, emotion_speakerminus, key, ref_audio, ref_text):

+

+ with gr.Tab("TTS-v3"):

+ gr.Markdown("## instruct model testing")

+ tts2_inputs = [

+ gr.Textbox(lines=2, placeholder="Enter text...", label="Input text"),

+ gr.Slider(minimum=0.5, maximum=2.0, value=1.0, step=0.1, label="Speed", info="Adjust speech rate (0.5x to 2.0x)"),

+ gr.Radio(choices=names, value="徐志胜", label="Available speakers", info="Select the speaker voice"),

+ gr.Radio(choices=["Angry", "Happy", "Surprise", "Sad"], value="Happy", label="Emotion", info="Select the emotion style"),

+ gr.Audio(type="filepath", label="Input audio"),

+ gr.Textbox(lines=2, placeholder="Enter the transcript of the input audio here...", label="Transcript of input audio")

+ ]

+ tts2_output = gr.Audio(type="filepath", label="Generated speech")

+ tts2_button = gr.Button("Generate speech")

+ tts2_button.click(

+ fn=generate_speech_speakerminus,

+ inputs=tts2_inputs,

+ outputs=tts2_output

+ )

+

+if __name__ == "__main__":

+ demo.launch(

+ server_name="0.0.0.0",

+ server_port=10132,

+ share=False

+ )

\ No newline at end of file
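Both `generate_speech_*` functions build their temporary path from `random.randint(0, 90)`, so at most 91 distinct names exist and two concurrent Gradio requests can overwrite each other's converted audio. A minimal sketch of an alternative, assuming the same sox flags as `convert_audio_with_sox`; `make_sox_command`, `reserve_temp_wav`, and `convert_to_unique_wav` are hypothetical helpers, not part of this repository:

```python
import os
import subprocess
import tempfile


def make_sox_command(input_file, output_file, target_sample_rate=16000):
    """Return the sox argument list for mono, 16-bit, resampled output."""
    # Same flags as convert_audio_with_sox: -r sample rate, -b bit depth, -c channels.
    return [
        "sox", input_file,
        "-r", str(target_sample_rate),
        "-b", "16",
        "-c", "1",
        output_file,
    ]


def reserve_temp_wav(prefix="ref_"):
    """Create an empty, uniquely named .wav file and return its path.

    tempfile.mkstemp creates the file atomically, so two callers never
    receive the same name.
    """
    fd, path = tempfile.mkstemp(prefix=prefix, suffix=".wav")
    os.close(fd)  # sox reopens the path itself when it writes the output
    return path


def convert_to_unique_wav(input_file, target_sample_rate=16000):
    """Convert input_file with sox into a fresh temp file; the caller deletes it."""
    output_file = reserve_temp_wav()
    try:
        subprocess.run(make_sox_command(input_file, output_file, target_sample_rate), check=True)
    except (OSError, subprocess.CalledProcessError):
        os.remove(output_file)  # do not leave an empty file behind on failure
        raise
    return output_file
```

In the Gradio handlers, `load_wav(convert_to_unique_wav(ref_audio), 16000)` would then read the converted copy; removing the file afterwards with `os.remove` is left to the caller. Unlike `convert_audio_with_sox`, this sketch re-raises conversion errors instead of printing them, so a failed conversion cannot silently fall through to `load_wav`.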

diff --git a/normal_infer.py b/normal_infer.py

new file mode 100644

index 0000000000000000000000000000000000000000..e69de29bb2d1d6434b8b29ae775ad8c2e48c5391

diff --git a/requirements.txt b/requirements.txt

new file mode 100644

index 0000000000000000000000000000000000000000..695fdbcf392fff144f29f3688acc28d6bf604289

--- /dev/null

+++ b/requirements.txt

@@ -0,0 +1,39 @@

+--extra-index-url https://download.pytorch.org/whl/cu121

+--extra-index-url https://aiinfra.pkgs.visualstudio.com/PublicPackages/_packaging/onnxruntime-cuda-12/pypi/simple/ # https://github.com/microsoft/onnxruntime/issues/21684

+conformer==0.3.2

+deepspeed==0.14.2; sys_platform == 'linux'

+diffusers==0.29.0

+gdown==5.1.0

+# gradio==5.4.0

+grpcio==1.57.0

+grpcio-tools==1.57.0

+hydra-core==1.3.2

+HyperPyYAML==1.2.2

+inflect==7.3.1

+librosa==0.10.2

+lightning==2.2.4

+matplotlib==3.7.5

+modelscope==1.15.0

+networkx==3.1

+omegaconf==2.3.0

+onnx==1.16.0

+onnxruntime-gpu==1.18.0; sys_platform == 'linux'

+onnxruntime==1.18.0; sys_platform == 'darwin' or sys_platform == 'win32'

+openai-whisper==20231117

+protobuf==4.25

+pydantic==2.7.0

+pyworld==0.3.4

+rich==13.7.1

+soundfile==0.12.1

+tensorboard==2.14.0

+tensorrt-cu12==10.0.1; sys_platform == 'linux'

+tensorrt-cu12-bindings==10.0.1; sys_platform == 'linux'

+tensorrt-cu12-libs==10.0.1; sys_platform == 'linux'

+torch==2.3.1

+torchaudio==2.3.1

+transformers==4.40.1

+uvicorn==0.30.0

+wget==3.2

+fastapi==0.115.6

+fastapi-cli==0.0.4

+WeTextProcessing==1.0.3

diff --git a/runtime/python/Dockerfile b/runtime/python/Dockerfile

new file mode 100644

index 0000000000000000000000000000000000000000..6b5e9442bfabd2c2238fb8ba32ea387a140d2cb8

--- /dev/null

+++ b/runtime/python/Dockerfile

@@ -0,0 +1,13 @@

+FROM pytorch/pytorch:2.0.1-cuda11.7-cudnn8-runtime

+ENV DEBIAN_FRONTEND=noninteractive

+

+WORKDIR /opt/CosyVoice

+

+RUN sed -i s@/archive.ubuntu.com/@/mirrors.aliyun.com/@g /etc/apt/sources.list

+RUN apt-get update -y

+RUN apt-get -y install git unzip git-lfs

+RUN git lfs install

+RUN git clone --recursive https://github.com/FunAudioLLM/CosyVoice.git

+# Python 3.10 is used here because we could not find an image that has both Python 3.8 and torch 2.0.1-cu118 installed

+RUN cd CosyVoice && pip3 install -r requirements.txt -i https://mirrors.aliyun.com/pypi/simple/ --trusted-host=mirrors.aliyun.com

+RUN cd CosyVoice/runtime/python/grpc && python3 -m grpc_tools.protoc -I. --python_out=. --grpc_python_out=. cosyvoice.proto

\ No newline at end of file

diff --git a/runtime/python/fastapi/client.py b/runtime/python/fastapi/client.py

new file mode 100644

index 0000000000000000000000000000000000000000..0fb29b76ff0a374fa1f1b457c6067c48ef5036a9

--- /dev/null

+++ b/runtime/python/fastapi/client.py

@@ -0,0 +1,92 @@

+# Copyright (c) 2024 Alibaba Inc (authors: Xiang Lyu)

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+import argparse

+import logging

+import requests

+import torch

+import torchaudio

+import numpy as np

+

+

+def main():

+ url = "http://{}:{}/inference_{}".format(args.host, args.port, args.mode)

+ if args.mode == 'sft':

+ payload = {

+ 'tts_text': args.tts_text,

+ 'spk_id': args.spk_id

+ }

+ response = requests.request("GET", url, data=payload, stream=True)

+ elif args.mode == 'zero_shot':

+ payload = {

+ 'tts_text': args.tts_text,

+ 'prompt_text': args.prompt_text

+ }

+ files = [('prompt_wav', ('prompt_wav', open(args.prompt_wav, 'rb'), 'application/octet-stream'))]

+ response = requests.request("GET", url, data=payload, files=files, stream=True)

+ elif args.mode == 'cross_lingual':

+ payload = {

+ 'tts_text': args.tts_text,

+ }

+ files = [('prompt_wav', ('prompt_wav', open(args.prompt_wav, 'rb'), 'application/octet-stream'))]

+ response = requests.request("GET", url, data=payload, files=files, stream=True)

+ else:

+ payload = {

+ 'tts_text': args.tts_text,

+ 'spk_id': args.spk_id,

+ 'instruct_text': args.instruct_text

+ }

+ response = requests.request("GET", url, data=payload, stream=True)

+ tts_audio = b''

+ for r in response.iter_content(chunk_size=16000):

+ tts_audio += r

+ tts_speech = torch.from_numpy(np.array(np.frombuffer(tts_audio, dtype=np.int16))).unsqueeze(dim=0)

+ logging.info('save response to {}'.format(args.tts_wav))

+ torchaudio.save(args.tts_wav, tts_speech, target_sr)

+ logging.info('get response')

+

+

+if __name__ == "__main__":

+ parser = argparse.ArgumentParser()

+ parser.add_argument('--host',

+ type=str,

+ default='0.0.0.0')

+ parser.add_argument('--port',

+ type=int,

+ default=50000)

+ parser.add_argument('--mode',

+ default='sft',

+ choices=['sft', 'zero_shot', 'cross_lingual', 'instruct'],

+ help='request mode')

+ parser.add_argument('--tts_text',

+ type=str,

+ default='你好,我是通义千问语音合成大模型,请问有什么可以帮您的吗?')

+ parser.add_argument('--spk_id',

+ type=str,

+ default='中文女')

+ parser.add_argument('--prompt_text',

+ type=str,

+ default='希望你以后能够做的比我还好呦。')

+ parser.add_argument('--prompt_wav',

+ type=str,

+ default='../../../asset/zero_shot_prompt.wav')

+ parser.add_argument('--instruct_text',

+ type=str,

+ default='Theo \'Crimson\', is a fiery, passionate rebel leader. \

+ Fights with fervor for justice, but struggles with impulsiveness.')

+ parser.add_argument('--tts_wav',

+ type=str,

+ default='demo.wav')

+ args = parser.parse_args()

+ prompt_sr, target_sr = 16000, 22050

+ main()
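`main()` collects the whole response in `tts_audio` before decoding it. To handle audio while it is still streaming, each chunk can be decoded as it arrives. A minimal sketch, assuming the wire format the FastAPI server in this diff produces (native-endian int16 samples scaled by `2 ** 15`); `iter_pcm16_floats` is a hypothetical helper:

```python
import numpy as np


def iter_pcm16_floats(byte_chunks):
    """Yield float32 arrays decoded from a stream of raw int16 PCM chunks.

    A chunk boundary can fall inside a 2-byte sample, so an odd trailing
    byte is held back and prepended to the next chunk.
    """
    pending = b""
    for chunk in byte_chunks:
        data = pending + chunk
        usable = len(data) - len(data) % 2  # length rounded down to whole samples
        pending = data[usable:]
        if usable:
            samples = np.frombuffer(data[:usable], dtype=np.int16)
            # Undo the server-side scaling by 2 ** 15.
            yield samples.astype(np.float32) / 2 ** 15
    if pending:
        raise ValueError("stream ended in the middle of an int16 sample")
```

With `requests`, the generator can wrap the same iterator the client already uses, e.g. `for block in iter_pcm16_floats(response.iter_content(chunk_size=16000)): ...`, where each `block` could be played or written out immediately.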

diff --git a/runtime/python/fastapi/server.py b/runtime/python/fastapi/server.py

new file mode 100644

index 0000000000000000000000000000000000000000..08542df4a3b30ff92d5b3133eedaf2d0a0955801

--- /dev/null

+++ b/runtime/python/fastapi/server.py

@@ -0,0 +1,101 @@

+# Copyright (c) 2024 Alibaba Inc (authors: Xiang Lyu)

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+import os

+import sys

+import argparse

+import logging

+logging.getLogger('matplotlib').setLevel(logging.WARNING)

+from fastapi import FastAPI, UploadFile, Form, File

+from fastapi.responses import StreamingResponse

+from fastapi.middleware.cors import CORSMiddleware

+import uvicorn

+import numpy as np

+ROOT_DIR = os.path.dirname(os.path.abspath(__file__))

+sys.path.append('{}/../../..'.format(ROOT_DIR))

+sys.path.append('{}/../../../third_party/Matcha-TTS'.format(ROOT_DIR))

+from cosyvoice.cli.cosyvoice import CosyVoice, CosyVoice2

+from cosyvoice.utils.file_utils import load_wav

+

+app = FastAPI()

+# set cross region allowance

+app.add_middleware(

+ CORSMiddleware,

+ allow_origins=["*"],

+ allow_credentials=True,

+ allow_methods=["*"],

+ allow_headers=["*"])

+

+

+def generate_data(model_output):

+ for i in model_output:

+ tts_audio = (i['tts_speech'].numpy() * (2 ** 15)).astype(np.int16).tobytes()

+ yield tts_audio

+

+

+@app.get("/inference_sft")

+@app.post("/inference_sft")

+async def inference_sft(tts_text: str = Form(), spk_id: str = Form()):

+ model_output = cosyvoice.inference_sft(tts_text, spk_id)

+ return StreamingResponse(generate_data(model_output))

+

+

+@app.get("/inference_zero_shot")

+@app.post("/inference_zero_shot")

+async def inference_zero_shot(tts_text: str = Form(), prompt_text: str = Form(), prompt_wav: UploadFile = File()):

+ prompt_speech_16k = load_wav(prompt_wav.file, 16000)

+ model_output = cosyvoice.inference_zero_shot(tts_text, prompt_text, prompt_speech_16k)

+ return StreamingResponse(generate_data(model_output))

+

+

+@app.get("/inference_cross_lingual")

+@app.post("/inference_cross_lingual")

+async def inference_cross_lingual(tts_text: str = Form(), prompt_wav: UploadFile = File()):

+ prompt_speech_16k = load_wav(prompt_wav.file, 16000)

+ model_output = cosyvoice.inference_cross_lingual(tts_text, prompt_speech_16k)

+ return StreamingResponse(generate_data(model_output))

+

+

+@app.get("/inference_instruct")

+@app.post("/inference_instruct")

+async def inference_instruct(tts_text: str = Form(), spk_id: str = Form(), instruct_text: str = Form()):

+ model_output = cosyvoice.inference_instruct(tts_text, spk_id, instruct_text)

+ return StreamingResponse(generate_data(model_output))

+

+@app.get("/inference_instruct2")

+@app.post("/inference_instruct2")

+async def inference_instruct2(tts_text: str = Form(), instruct_text: str = Form(), prompt_wav: UploadFile = File()):

+ prompt_speech_16k = load_wav(prompt_wav.file, 16000)

+ model_output = cosyvoice.inference_instruct2(tts_text, instruct_text, prompt_speech_16k)

+ return StreamingResponse(generate_data(model_output))

+

+

+

+if __name__ == '__main__':

+ parser = argparse.ArgumentParser()

+ parser.add_argument('--port',

+ type=int,

+ default=50000)

+ parser.add_argument('--model_dir',

+ type=str,

+ default='iic/CosyVoice-300M',

+ help='local path or modelscope repo id')

+ args = parser.parse_args()

+ try:

+ cosyvoice = CosyVoice(args.model_dir)

+ except Exception:

+ try:

+ cosyvoice = CosyVoice2(args.model_dir)

+ except Exception:

+ raise TypeError('no valid model_type!')

+ uvicorn.run(app, host="0.0.0.0", port=args.port)

\ No newline at end of file
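`generate_data` here (and `Inference` in the gRPC server) converts model output with `(x * (2 ** 15)).astype(np.int16)`. A sample at exactly 1.0 scales to 32768, one past the int16 maximum, and numpy's result for an out-of-range float-to-int cast is platform-dependent, so it can wrap to a large negative value and produce a click. A minimal sketch of a clipped conversion that keeps the same `2 ** 15` scale the clients divide by; `float_to_pcm16_bytes` and `clipped_generate_data` are hypothetical names, and the `'tts_speech'` item layout is taken from `generate_data` above:

```python
import numpy as np


def float_to_pcm16_bytes(samples):
    """Scale float samples by 2 ** 15 and pack them as native-endian int16 bytes.

    Values are clipped to [-32768, 32767] before the cast, so +1.0 maps to
    32767 instead of overflowing.
    """
    scaled = np.round(np.asarray(samples, dtype=np.float64) * 2 ** 15)
    scaled = np.clip(scaled, -2 ** 15, 2 ** 15 - 1)
    return scaled.astype(np.int16).tobytes()


def clipped_generate_data(model_output):
    """Variant of generate_data: yield one PCM byte chunk per model output item."""
    for item in model_output:
        # Each item is assumed to be a dict whose 'tts_speech' tensor has .numpy().
        yield float_to_pcm16_bytes(item['tts_speech'].numpy())
```

Clipping rather than rescaling by 32767 keeps the decoder side unchanged: the clients and the gRPC server still divide by `2 ** 15`.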

diff --git a/runtime/python/grpc/client.py b/runtime/python/grpc/client.py

new file mode 100644

index 0000000000000000000000000000000000000000..9885130aaf6453ccbc9c7addc9906a6720d18977

--- /dev/null

+++ b/runtime/python/grpc/client.py

@@ -0,0 +1,106 @@

+# Copyright (c) 2024 Alibaba Inc (authors: Xiang Lyu)

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+import os

+import sys

+ROOT_DIR = os.path.dirname(os.path.abspath(__file__))

+sys.path.append('{}/../../..'.format(ROOT_DIR))

+sys.path.append('{}/../../../third_party/Matcha-TTS'.format(ROOT_DIR))

+import logging

+import argparse

+import torchaudio

+import cosyvoice_pb2

+import cosyvoice_pb2_grpc

+import grpc

+import torch

+import numpy as np

+from cosyvoice.utils.file_utils import load_wav

+

+

+def main():

+ with grpc.insecure_channel("{}:{}".format(args.host, args.port)) as channel:

+ stub = cosyvoice_pb2_grpc.CosyVoiceStub(channel)

+ request = cosyvoice_pb2.Request()

+ if args.mode == 'sft':

+ logging.info('send sft request')

+ sft_request = cosyvoice_pb2.sftRequest()

+ sft_request.spk_id = args.spk_id

+ sft_request.tts_text = args.tts_text

+ request.sft_request.CopyFrom(sft_request)

+ elif args.mode == 'zero_shot':

+ logging.info('send zero_shot request')

+ zero_shot_request = cosyvoice_pb2.zeroshotRequest()

+ zero_shot_request.tts_text = args.tts_text

+ zero_shot_request.prompt_text = args.prompt_text

+ prompt_speech = load_wav(args.prompt_wav, 16000)

+ zero_shot_request.prompt_audio = (prompt_speech.numpy() * (2**15)).astype(np.int16).tobytes()

+ request.zero_shot_request.CopyFrom(zero_shot_request)

+ elif args.mode == 'cross_lingual':

+ logging.info('send cross_lingual request')

+ cross_lingual_request = cosyvoice_pb2.crosslingualRequest()

+ cross_lingual_request.tts_text = args.tts_text

+ prompt_speech = load_wav(args.prompt_wav, 16000)

+ cross_lingual_request.prompt_audio = (prompt_speech.numpy() * (2**15)).astype(np.int16).tobytes()

+ request.cross_lingual_request.CopyFrom(cross_lingual_request)

+ else:

+ logging.info('send instruct request')

+ instruct_request = cosyvoice_pb2.instructRequest()

+ instruct_request.tts_text = args.tts_text

+ instruct_request.spk_id = args.spk_id

+ instruct_request.instruct_text = args.instruct_text

+ request.instruct_request.CopyFrom(instruct_request)

+

+ response = stub.Inference(request)

+ tts_audio = b''

+ for r in response:

+ tts_audio += r.tts_audio

+ tts_speech = torch.from_numpy(np.array(np.frombuffer(tts_audio, dtype=np.int16))).unsqueeze(dim=0)

+ logging.info('save response to {}'.format(args.tts_wav))

+ torchaudio.save(args.tts_wav, tts_speech, target_sr)

+ logging.info('get response')

+

+

+if __name__ == "__main__":

+ parser = argparse.ArgumentParser()

+ parser.add_argument('--host',

+ type=str,

+ default='0.0.0.0')

+ parser.add_argument('--port',

+ type=int,

+ default=50000)

+ parser.add_argument('--mode',

+ default='sft',

+ choices=['sft', 'zero_shot', 'cross_lingual', 'instruct'],

+ help='request mode')

+ parser.add_argument('--tts_text',

+ type=str,

+ default='你好,我是通义千问语音合成大模型,请问有什么可以帮您的吗?')

+ parser.add_argument('--spk_id',

+ type=str,

+ default='中文女')

+ parser.add_argument('--prompt_text',

+ type=str,

+ default='希望你以后能够做的比我还好呦。')

+ parser.add_argument('--prompt_wav',

+ type=str,

+ default='../../../asset/zero_shot_prompt.wav')

+ parser.add_argument('--instruct_text',

+ type=str,

+ default='Theo \'Crimson\', is a fiery, passionate rebel leader. \

+ Fights with fervor for justice, but struggles with impulsiveness.')

+ parser.add_argument('--tts_wav',

+ type=str,

+ default='demo.wav')

+ args = parser.parse_args()

+ prompt_sr, target_sr = 16000, 22050

+ main()

diff --git a/runtime/python/grpc/cosyvoice.proto b/runtime/python/grpc/cosyvoice.proto

new file mode 100644

index 0000000000000000000000000000000000000000..fe0c3ad242326813b7e925c66a2d9b09567f548c

--- /dev/null

+++ b/runtime/python/grpc/cosyvoice.proto

@@ -0,0 +1,43 @@

+syntax = "proto3";

+

+package cosyvoice;

+option go_package = "protos/";

+

+service CosyVoice{

+ rpc Inference(Request) returns (stream Response) {}

+}

+

+message Request{

+ oneof RequestPayload {

+ sftRequest sft_request = 1;

+ zeroshotRequest zero_shot_request = 2;

+ crosslingualRequest cross_lingual_request = 3;

+ instructRequest instruct_request = 4;

+ }

+}

+

+message sftRequest{

+ string spk_id = 1;

+ string tts_text = 2;

+}

+

+message zeroshotRequest{

+ string tts_text = 1;

+ string prompt_text = 2;

+ bytes prompt_audio = 3;

+}

+

+message crosslingualRequest{

+ string tts_text = 1;

+ bytes prompt_audio = 2;

+}

+

+message instructRequest{

+ string tts_text = 1;

+ string spk_id = 2;

+ string instruct_text = 3;

+}

+

+message Response{

+ bytes tts_audio = 1;

+}

\ No newline at end of file

diff --git a/runtime/python/grpc/server.py b/runtime/python/grpc/server.py

new file mode 100644

index 0000000000000000000000000000000000000000..1cb48ae557c38196dcfda3ebef847350a7665220

--- /dev/null

+++ b/runtime/python/grpc/server.py

@@ -0,0 +1,96 @@

+# Copyright (c) 2024 Alibaba Inc (authors: Xiang Lyu)

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+import os

+import sys

+from concurrent import futures

+import argparse

+import cosyvoice_pb2

+import cosyvoice_pb2_grpc

+import logging

+logging.getLogger('matplotlib').setLevel(logging.WARNING)

+import grpc

+import torch

+import numpy as np

+ROOT_DIR = os.path.dirname(os.path.abspath(__file__))

+sys.path.append('{}/../../..'.format(ROOT_DIR))

+sys.path.append('{}/../../../third_party/Matcha-TTS'.format(ROOT_DIR))

+from cosyvoice.cli.cosyvoice import CosyVoice, CosyVoice2

+

+logging.basicConfig(level=logging.DEBUG,

+ format='%(asctime)s %(levelname)s %(message)s')

+

+

+class CosyVoiceServiceImpl(cosyvoice_pb2_grpc.CosyVoiceServicer):

+ def __init__(self, args):

+ try:

+ self.cosyvoice = CosyVoice(args.model_dir)

+ except Exception:

+ try:

+ self.cosyvoice = CosyVoice2(args.model_dir)

+ except Exception:

+ raise TypeError('no valid model_type!')

+ logging.info('grpc service initialized')

+

+ def Inference(self, request, context):

+ if request.HasField('sft_request'):

+ logging.info('get sft inference request')

+ model_output = self.cosyvoice.inference_sft(request.sft_request.tts_text, request.sft_request.spk_id)

+ elif request.HasField('zero_shot_request'):

+ logging.info('get zero_shot inference request')

+ prompt_speech_16k = torch.from_numpy(np.array(np.frombuffer(request.zero_shot_request.prompt_audio, dtype=np.int16))).unsqueeze(dim=0)

+ prompt_speech_16k = prompt_speech_16k.float() / (2**15)

+ model_output = self.cosyvoice.inference_zero_shot(request.zero_shot_request.tts_text,

+ request.zero_shot_request.prompt_text,

+ prompt_speech_16k)

+ elif request.HasField('cross_lingual_request'):

+ logging.info('get cross_lingual inference request')

+ prompt_speech_16k = torch.from_numpy(np.array(np.frombuffer(request.cross_lingual_request.prompt_audio, dtype=np.int16))).unsqueeze(dim=0)

+ prompt_speech_16k = prompt_speech_16k.float() / (2**15)

+ model_output = self.cosyvoice.inference_cross_lingual(request.cross_lingual_request.tts_text, prompt_speech_16k)

+ else:

+ logging.info('get instruct inference request')

+ model_output = self.cosyvoice.inference_instruct(request.instruct_request.tts_text,

+ request.instruct_request.spk_id,

+ request.instruct_request.instruct_text)

+

+ logging.info('send inference response')

+ for i in model_output:

+ response = cosyvoice_pb2.Response()

+ response.tts_audio = (i['tts_speech'].numpy() * (2 ** 15)).astype(np.int16).tobytes()

+ yield response

+

+

+def main():

+ grpcServer = grpc.server(futures.ThreadPoolExecutor(max_workers=args.max_conc), maximum_concurrent_rpcs=args.max_conc)

+ cosyvoice_pb2_grpc.add_CosyVoiceServicer_to_server(CosyVoiceServiceImpl(args), grpcServer)

+ grpcServer.add_insecure_port('0.0.0.0:{}'.format(args.port))

+ grpcServer.start()

+ logging.info("server listening on 0.0.0.0:{}".format(args.port))

+ grpcServer.wait_for_termination()

+

+

+if __name__ == '__main__':

+ parser = argparse.ArgumentParser()

+ parser.add_argument('--port',

+ type=int,

+ default=50000)

+ parser.add_argument('--max_conc',

+ type=int,

+ default=4)

+ parser.add_argument('--model_dir',

+ type=str,

+ default='iic/CosyVoice-300M',

+ help='local path or modelscope repo id')

+ args = parser.parse_args()

+ main()

diff --git a/third_party/Matcha-TTS/.env.example b/third_party/Matcha-TTS/.env.example

new file mode 100644

index 0000000000000000000000000000000000000000..a790e320464ebc778ca07f5bcd826a9c8412ed0e

--- /dev/null

+++ b/third_party/Matcha-TTS/.env.example

@@ -0,0 +1,6 @@

+# example of file for storing private and user specific environment variables, like keys or system paths

+# rename it to ".env" (excluded from version control by default)

+# .env is loaded by train.py automatically

+# hydra allows you to reference variables in .yaml configs with special syntax: ${oc.env:MY_VAR}

+

+MY_VAR="/home/user/my/system/path"
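The comments above say `.env` is loaded into the environment by `train.py`, after which Hydra can resolve `${oc.env:MY_VAR}`. The loader `train.py` actually uses is not part of this diff; as a stdlib-only illustration of what loading a `.env` file means, here is a minimal sketch with a hypothetical `load_env_file` helper:

```python
import os
import shlex


def load_env_file(path, override=False):
    """Read KEY=value lines from a .env-style file into os.environ.

    Blank lines, '#' comment lines, and lines without '=' are skipped.
    Values are unquoted with shlex, the way a POSIX shell would, and
    existing variables are kept unless override=True.
    """
    loaded = {}
    with open(path, encoding="utf-8") as fh:
        for raw_line in fh:
            line = raw_line.strip()
            if not line or line.startswith("#") or "=" not in line:
                continue
            key, _, value = line.partition("=")
            key = key.strip()
            # comments=True also drops a trailing "# ..." after the value.
            value = " ".join(shlex.split(value, comments=True))
            loaded[key] = value
            if override or key not in os.environ:
                os.environ[key] = value
    return loaded
```

After loading the example file, a config value written as `${oc.env:MY_VAR}` would resolve to `/home/user/my/system/path`, because that resolver reads from `os.environ`.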

diff --git a/third_party/Matcha-TTS/.github/PULL_REQUEST_TEMPLATE.md b/third_party/Matcha-TTS/.github/PULL_REQUEST_TEMPLATE.md

new file mode 100644

index 0000000000000000000000000000000000000000..410bcd87a45297ab8f0d369574a032858b6b1811

--- /dev/null

+++ b/third_party/Matcha-TTS/.github/PULL_REQUEST_TEMPLATE.md

@@ -0,0 +1,22 @@

+## What does this PR do?

+

+

+

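+Fixes #\

+Model description: on top of voice cloning, this model adds emotion control and can generate speech in a specified emotion.

+Usage tip: how clearly the emotion comes through depends on the text content, so make sure the text matches the selected emotion.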

+

+

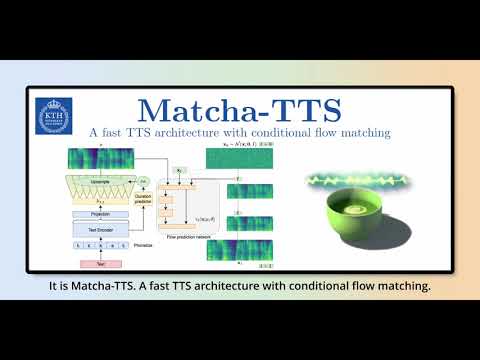

+# 🍵 Matcha-TTS: A fast TTS architecture with conditional flow matching

+

+### [Shivam Mehta](https://www.kth.se/profile/smehta), [Ruibo Tu](https://www.kth.se/profile/ruibo), [Jonas Beskow](https://www.kth.se/profile/beskow), [Éva Székely](https://www.kth.se/profile/szekely), and [Gustav Eje Henter](https://people.kth.se/~ghe/)

+

+[Python 3.10](https://www.python.org/downloads/release/python-3100/)

+[PyTorch](https://pytorch.org/get-started/locally/)

+[Lightning](https://pytorchlightning.ai/)

+[Hydra](https://hydra.cc/)

+[Code style: black](https://black.readthedocs.io/en/stable/)

+[Imports: isort](https://pycqa.github.io/isort/)

+

+

+

+> This is the official code implementation of 🍵 Matcha-TTS [ICASSP 2024].

+

+We propose 🍵 Matcha-TTS, a new approach to non-autoregressive neural TTS that uses [conditional flow matching](https://arxiv.org/abs/2210.02747) (similar to [rectified flows](https://arxiv.org/abs/2209.03003)) to speed up ODE-based speech synthesis. Our method:

+

+- Is probabilistic

+- Has a compact memory footprint

+- Sounds highly natural

+- Is very fast to synthesise from
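Conditional flow matching trains a network to predict the velocity along a straight path from Gaussian noise $x_0$ to a data sample $x_1$, namely $x_t = (1-(1-\sigma_{\min})t)\,x_0 + t\,x_1$ with target velocity $u_t = x_1 - (1-\sigma_{\min})\,x_0$; synthesis then integrates the learned field from $t=0$ to $t=1$ with a few ODE steps. The NumPy sketch below illustrates that optimal-transport formulation from the linked flow matching paper. `velocity_fn` stands in for the trained decoder and the value of `SIGMA_MIN` is an assumption, so treat this as an illustration of the technique rather than Matcha-TTS's own code:

```python
import numpy as np

SIGMA_MIN = 1e-4  # assumed small constant for illustration


def cfm_sample_and_target(x1, t, rng, sigma_min=SIGMA_MIN):
    """Draw noise x0, build the point x_t on the straight path, and its target velocity u_t."""
    x0 = rng.standard_normal(x1.shape)
    xt = (1.0 - (1.0 - sigma_min) * t) * x0 + t * x1
    ut = x1 - (1.0 - sigma_min) * x0
    return x0, xt, ut


def cfm_loss(v_pred, ut):
    """Mean squared error between predicted and target velocities (the training loss)."""
    return float(np.mean((v_pred - ut) ** 2))


def euler_sample(velocity_fn, x0, n_steps=10):
    """Integrate dx/dt = velocity_fn(x, t) from t=0 to t=1 with fixed Euler steps."""
    x, dt = x0.copy(), 1.0 / n_steps
    for k in range(n_steps):
        x = x + dt * velocity_fn(x, k * dt)
    return x
```

Along one conditional path the target velocity is constant, so an Euler integrator fed that exact velocity reaches $x_1 + \sigma_{\min} x_0$ regardless of the step count; this hints at why straight paths permit synthesis in few steps.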

+

+Check out our [demo page](https://shivammehta25.github.io/Matcha-TTS) and read [our ICASSP 2024 paper](https://arxiv.org/abs/2309.03199) for more details.

+

+[Pre-trained models](https://drive.google.com/drive/folders/17C_gYgEHOxI5ZypcfE_k1piKCtyR0isJ?usp=sharing) will be automatically downloaded with the CLI or gradio interface.

+

+You can also [try 🍵 Matcha-TTS in your browser on HuggingFace 🤗 spaces](https://huggingface.co/spaces/shivammehta25/Matcha-TTS).

+

+## Teaser video

+

+[Watch the teaser video on YouTube](https://youtu.be/xmvJkz3bqw0)

+

+## Installation

+

+1. Create an environment (suggested but optional)

+

+```bash

+conda create -n matcha-tts python=3.10 -y

+conda activate matcha-tts

+```

+

+2. Install Matcha TTS using pip or from source

+

+```bash

+pip install matcha-tts

+```

+

+or from source:

+

+```bash

+git clone https://github.com/shivammehta25/Matcha-TTS.git

+cd Matcha-TTS

+pip install -e .

+```

+

+3. Run CLI / gradio app / jupyter notebook

+

+```bash

+# This will download the required models

+matcha-tts --text ""

+```

+

+or

+

+```bash

+matcha-tts-app

+```

+

+or open `synthesis.ipynb` in Jupyter Notebook

+

+### CLI Arguments

+

+- To synthesise from given text, run:

+

+```bash

+matcha-tts --text ""

+```

+

+- To synthesise from a file, run:

+

+```bash

+matcha-tts --file

+  +

+