Qwen3-4B-Instruct-2507 GGUF (ShapeLearn Quantized)

This is a GGUF-quantized version of Qwen3-4B-Instruct-2507 produced with ByteShape's ShapeLearn, which learns the optimal datatype per tensor to maintain high quality even at very low bit lengths (the exclusive focus of this release).

To learn more about ShapeLearn and to see detailed benchmarks across GPUs, CPUs, and even the Raspberry Pi, please visit our blog.

If you have questions or want to share feedback, reach us on Reddit.

How to Pick a Model

We provide CPU and GPU optimized variants for llama.cpp:

- CPUs: KQ quantization is preferred due to GGML kernel efficiency.

- NVIDIA GPUs: IQ quantization delivers faster throughput on modern architectures.

Each hardware target includes a range of models covering different size–quality tradeoffs.

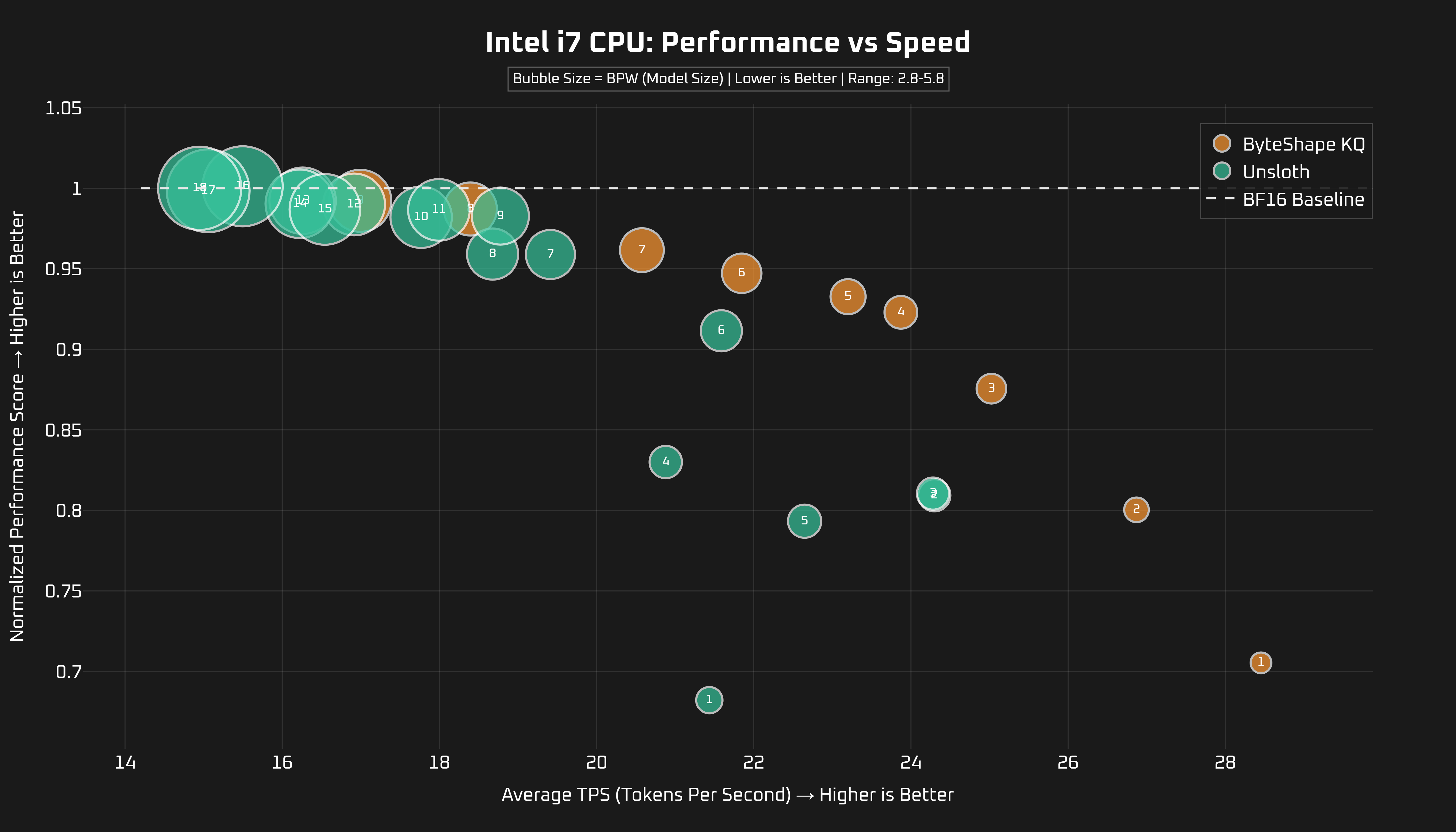

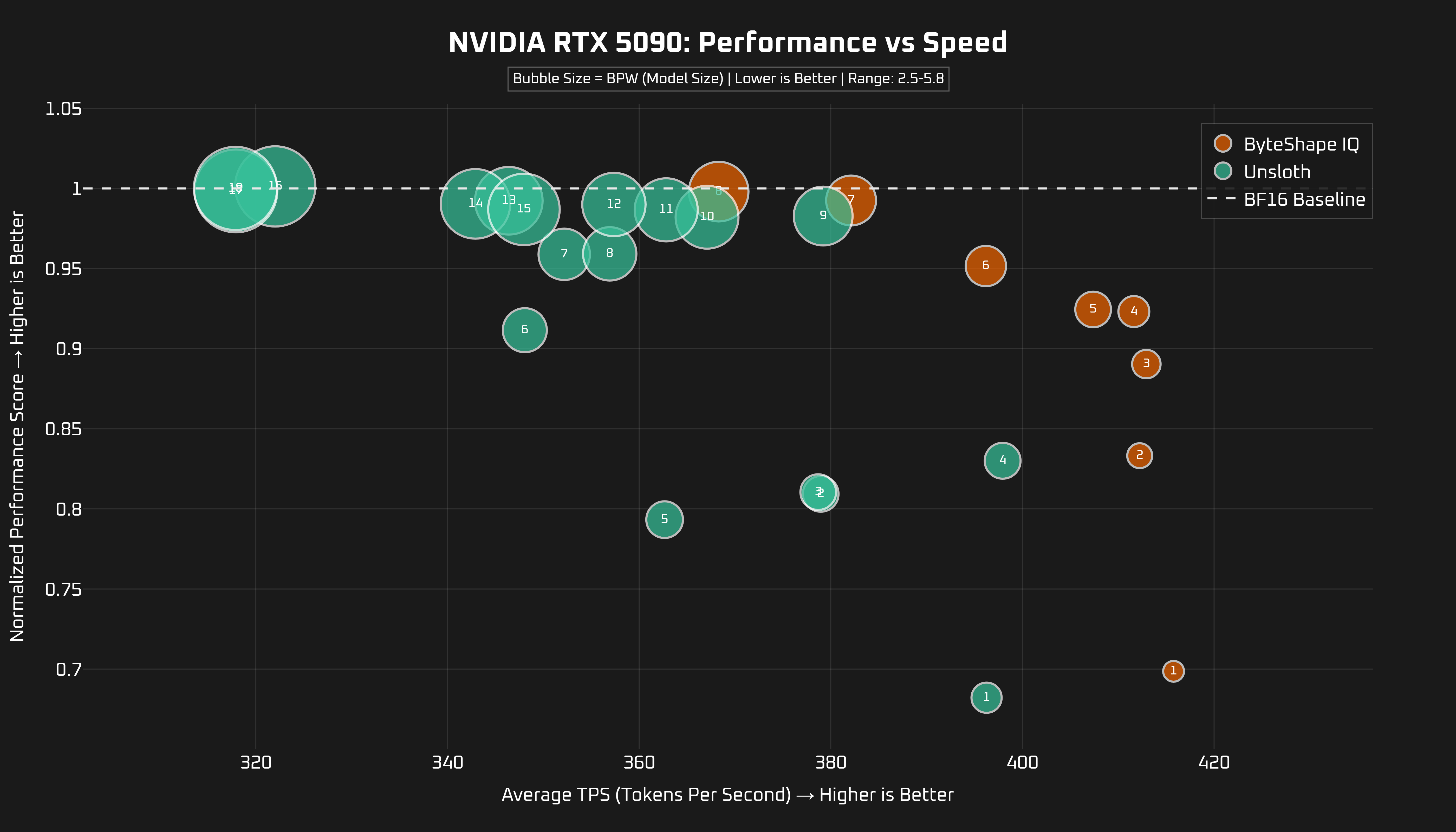

The charts below show quality vs. tokens per second for each device, comparing ShapeLearn models with Unsloth baselines.

Selection rule: Choose the model with the highest quality at your target throughput or the fastest model that still meets your required quality.
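The selection rule can be sketched in a few lines of Python. This is a minimal illustration, not part of the release: the `Candidate` type and both function names are hypothetical, the quality values are meant to come from the tables in this card, and the tokens-per-second figures are assumed to be numbers you measure on your own device (for example with llama.cpp's `llama-bench`).

```python
from typing import NamedTuple, Optional, Sequence


class Candidate(NamedTuple):
    # Hypothetical record type used only for this illustration.
    model_id: str          # e.g. "KQ-4" or "IQ-6"
    quality: float         # normalized quality in percent, from the tables
    tokens_per_sec: float  # throughput measured on your own hardware


def best_quality_at_throughput(
    cands: Sequence[Candidate], min_tps: float
) -> Optional[Candidate]:
    """Return the highest-quality model that reaches at least min_tps."""
    fast_enough = [c for c in cands if c.tokens_per_sec >= min_tps]
    return max(fast_enough, key=lambda c: c.quality, default=None)


def fastest_at_quality(
    cands: Sequence[Candidate], min_quality: float
) -> Optional[Candidate]:
    """Return the fastest model whose quality is at least min_quality."""
    good_enough = [c for c in cands if c.quality >= min_quality]
    return max(good_enough, key=lambda c: c.tokens_per_sec, default=None)
```

Both functions return `None` when no candidate meets the constraint, which signals that the target throughput or quality needs to be relaxed.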

GGUF-KQ Models: Best for CPU

| Model ID | Bits/Weight | Model Size | Normalized Quality |
|---|---|---|---|
| KQ-1 | 2.77 | 1.4 GB | 70.33% |
| KQ-2 | 2.95 | 1.49 GB | 79.81% |
| KQ-3 | 3.19 | 1.61 GB | 87.31% |
| KQ-4 | 3.34 | 1.69 GB | 92.04% |
| KQ-5 | 3.45 | 1.74 GB | 93.01% |
| KQ-6 | 3.66 | 1.84 GB | 94.46% |
| KQ-7 | 3.87 | 1.95 GB | 95.89% |
| KQ-8 | 4.31 | 2.17 GB | 98.44% |
| KQ-9 | 4.74 | 2.39 GB | 98.95% |

GGUF-IQ Models: Best for GPU

| Model ID | Bits/Weight | Model Size | Normalized Score |
|---|---|---|---|
| IQ-1 | 2.55 | 1.29 GB | 69.87% |
| IQ-2 | 2.76 | 1.39 GB | 83.32% |
| IQ-3 | 2.94 | 1.49 GB | 89.04% |
| IQ-4 | 3.07 | 1.55 GB | 92.32% |
| IQ-5 | 3.31 | 1.67 GB | 92.45% |
| IQ-6 | 3.55 | 1.79 GB | 95.16% |
| IQ-7 | 4.04 | 2.04 GB | 99.25% |
| IQ-8 | 4.54 | 2.29 GB | 99.80% |
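The Bits/Weight and Model Size columns in the two tables are linked by simple arithmetic: file size is roughly the number of weights times bits per weight, divided by 8. The sketch below checks this against the table rows. It assumes that 1 GB means 10^9 bytes and that the file is dominated by weight data, neither of which is stated in this card; the function name is hypothetical.

```python
# (model_id, bits_per_weight, size_gb) copied from the KQ and IQ tables.
ROWS = [
    ("KQ-1", 2.77, 1.40), ("KQ-2", 2.95, 1.49), ("KQ-3", 3.19, 1.61),
    ("KQ-4", 3.34, 1.69), ("KQ-5", 3.45, 1.74), ("KQ-6", 3.66, 1.84),
    ("KQ-7", 3.87, 1.95), ("KQ-8", 4.31, 2.17), ("KQ-9", 4.74, 2.39),
    ("IQ-1", 2.55, 1.29), ("IQ-2", 2.76, 1.39), ("IQ-3", 2.94, 1.49),
    ("IQ-4", 3.07, 1.55), ("IQ-5", 3.31, 1.67), ("IQ-6", 3.55, 1.79),
    ("IQ-7", 4.04, 2.04), ("IQ-8", 4.54, 2.29),
]


def implied_params_billion(bits_per_weight: float, size_gb: float) -> float:
    """Weights (in billions) implied by a file size and an average bit width.

    Assumes 1 GB = 10^9 bytes, so GB * 8 / bits gives billions of weights.
    """
    return size_gb * 8 / bits_per_weight


for model_id, bits, size_gb in ROWS:
    print(f"{model_id}: ~{implied_params_billion(bits, size_gb):.2f}B weights")
```

Under these assumptions every row implies between 4.0 and 4.1 billion weights, which is consistent with a 4B-class base model and suggests the two columns can be used interchangeably when budgeting memory.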

