text

stringlengths 0

2k

| heading1

stringlengths 4

79

| source_page_url

stringclasses 182

values | source_page_title

stringclasses 182

values |

|---|---|---|---|

tbot that not only responds to users but also shows its sources, creating a more transparent and trustworthy interaction. See our finished Citations demo [here](https://huggingface.co/spaces/ysharma/anthropic-citations-with-gradio-metadata-key).

|

Building with Visibly Thinking LLMs

|

https://gradio.app/guides/agents-and-tool-usage

|

Chatbots - Agents And Tool Usage Guide

|

Chatbots are a popular application of large language models (LLMs). Using Gradio, you can easily build a chat application and share that with your users, or try it yourself using an intuitive UI.

This tutorial uses `gr.ChatInterface()`, which is a high-level abstraction that allows you to create your chatbot UI fast, often with a _few lines of Python_. It can be easily adapted to support multimodal chatbots, or chatbots that require further customization.

**Prerequisites**: please make sure you are using the latest version of Gradio:

```bash

$ pip install --upgrade gradio

```

|

Introduction

|

https://gradio.app/guides/creating-a-chatbot-fast

|

Chatbots - Creating A Chatbot Fast Guide

|

If you have a chat server serving an OpenAI-API compatible endpoint (such as Ollama), you can spin up a ChatInterface in a single line of Python. First, also run `pip install openai`. Then, with your own URL, model, and optional token:

```python

import gradio as gr

gr.load_chat("http://localhost:11434/v1/", model="llama3.2", token="***").launch()

```

Read about `gr.load_chat` in [the docs](https://www.gradio.app/docs/gradio/load_chat). If you have your own model, keep reading to see how to create an application around any chat model in Python!

|

Note for OpenAI-API compatible endpoints

|

https://gradio.app/guides/creating-a-chatbot-fast

|

Chatbots - Creating A Chatbot Fast Guide

|

To create a chat application with `gr.ChatInterface()`, the first thing you should do is define your **chat function**. In the simplest case, your chat function should accept two arguments: `message` and `history` (the arguments can be named anything, but must be in this order).

- `message`: a `str` representing the user's most recent message.

- `history`: a list of openai-style dictionaries with `role` and `content` keys, representing the previous conversation history. May also include additional keys representing message metadata.

The `history` would look like this:

```python

[

{"role": "user", "content": [{"type": "text", "text": "What is the capital of France?"}]},

{"role": "assistant", "content": [{"type": "text", "text": "Paris"}]}

]

```

while the next `message` would be:

```py

"And what is its largest city?"

```

Your chat function simply needs to return:

* a `str` value, which is the chatbot's response based on the chat `history` and most recent `message`, for example, in this case:

```

Paris is also the largest city.

```

Let's take a look at a few example chat functions:

**Example: a chatbot that randomly responds with yes or no**

Let's write a chat function that responds `Yes` or `No` randomly.

Here's our chat function:

```python

import random

def random_response(message, history):

return random.choice(["Yes", "No"])

```

Now, we can plug this into `gr.ChatInterface()` and call the `.launch()` method to create the web interface:

```python

import gradio as gr

gr.ChatInterface(

fn=random_response,

).launch()

```

That's it! Here's our running demo, try it out:

$demo_chatinterface_random_response

**Example: a chatbot that alternates between agreeing and disagreeing**

Of course, the previous example was very simplistic, it didn't take user input or the previous history into account! Here's another simple example showing how to incorporate a user's input as well as the history.

```python

import gradio as gr

def alternatingl

|

Defining a chat function

|

https://gradio.app/guides/creating-a-chatbot-fast

|

Chatbots - Creating A Chatbot Fast Guide

|

t take user input or the previous history into account! Here's another simple example showing how to incorporate a user's input as well as the history.

```python

import gradio as gr

def alternatingly_agree(message, history):

if len([h for h in history if h['role'] == "assistant"]) % 2 == 0:

return f"Yes, I do think that: {message}"

else:

return "I don't think so"

gr.ChatInterface(

fn=alternatingly_agree,

).launch()

```

We'll look at more realistic examples of chat functions in our next Guide, which shows [examples of using `gr.ChatInterface` with popular LLMs](../guides/chatinterface-examples).

|

Defining a chat function

|

https://gradio.app/guides/creating-a-chatbot-fast

|

Chatbots - Creating A Chatbot Fast Guide

|

In your chat function, you can use `yield` to generate a sequence of partial responses, each replacing the previous ones. This way, you'll end up with a streaming chatbot. It's that simple!

```python

import time

import gradio as gr

def slow_echo(message, history):

for i in range(len(message)):

time.sleep(0.3)

yield "You typed: " + message[: i+1]

gr.ChatInterface(

fn=slow_echo,

).launch()

```

While the response is streaming, the "Submit" button turns into a "Stop" button that can be used to stop the generator function.

Tip: Even though you are yielding the latest message at each iteration, Gradio only sends the "diff" of each message from the server to the frontend, which reduces latency and data consumption over your network.

|

Streaming chatbots

|

https://gradio.app/guides/creating-a-chatbot-fast

|

Chatbots - Creating A Chatbot Fast Guide

|

If you're familiar with Gradio's `gr.Interface` class, the `gr.ChatInterface` includes many of the same arguments that you can use to customize the look and feel of your Chatbot. For example, you can:

- add a title and description above your chatbot using `title` and `description` arguments.

- add a theme or custom css using `theme` and `css` arguments respectively in the `launch()` method.

- add `examples` and even enable `cache_examples`, which make your Chatbot easier for users to try it out.

- customize the chatbot (e.g. to change the height or add a placeholder) or textbox (e.g. to add a max number of characters or add a placeholder).

**Adding examples**

You can add preset examples to your `gr.ChatInterface` with the `examples` parameter, which takes a list of string examples. Any examples will appear as "buttons" within the Chatbot before any messages are sent. If you'd like to include images or other files as part of your examples, you can do so by using this dictionary format for each example instead of a string: `{"text": "What's in this image?", "files": ["cheetah.jpg"]}`. Each file will be a separate message that is added to your Chatbot history.

You can change the displayed text for each example by using the `example_labels` argument. You can add icons to each example as well using the `example_icons` argument. Both of these arguments take a list of strings, which should be the same length as the `examples` list.

If you'd like to cache the examples so that they are pre-computed and the results appear instantly, set `cache_examples=True`.

**Customizing the chatbot or textbox component**

If you want to customize the `gr.Chatbot` or `gr.Textbox` that compose the `ChatInterface`, then you can pass in your own chatbot or textbox components. Here's an example of how we to apply the parameters we've discussed in this section:

```python

import gradio as gr

def yes_man(message, history):

if message.endswith("?"):

return "Yes"

else:

|

Customizing the Chat UI

|

https://gradio.app/guides/creating-a-chatbot-fast

|

Chatbots - Creating A Chatbot Fast Guide

|

le of how we to apply the parameters we've discussed in this section:

```python

import gradio as gr

def yes_man(message, history):

if message.endswith("?"):

return "Yes"

else:

return "Ask me anything!"

gr.ChatInterface(

yes_man,

chatbot=gr.Chatbot(height=300),

textbox=gr.Textbox(placeholder="Ask me a yes or no question", container=False, scale=7),

title="Yes Man",

description="Ask Yes Man any question",

examples=["Hello", "Am I cool?", "Are tomatoes vegetables?"],

cache_examples=True,

).launch(theme="ocean")

```

Here's another example that adds a "placeholder" for your chat interface, which appears before the user has started chatting. The `placeholder` argument of `gr.Chatbot` accepts Markdown or HTML:

```python

gr.ChatInterface(

yes_man,

chatbot=gr.Chatbot(placeholder="<strong>Your Personal Yes-Man</strong><br>Ask Me Anything"),

...

```

The placeholder appears vertically and horizontally centered in the chatbot.

|

Customizing the Chat UI

|

https://gradio.app/guides/creating-a-chatbot-fast

|

Chatbots - Creating A Chatbot Fast Guide

|

You may want to add multimodal capabilities to your chat interface. For example, you may want users to be able to upload images or files to your chatbot and ask questions about them. You can make your chatbot "multimodal" by passing in a single parameter (`multimodal=True`) to the `gr.ChatInterface` class.

When `multimodal=True`, the signature of your chat function changes slightly: the first parameter of your function (what we referred to as `message` above) should accept a dictionary consisting of the submitted text and uploaded files that looks like this:

```py

{

"text": "user input",

"files": [

"updated_file_1_path.ext",

"updated_file_2_path.ext",

...

]

}

```

This second parameter of your chat function, `history`, will be in the same openai-style dictionary format as before. However, if the history contains uploaded files, the `content` key will be a dictionary with a "type" key whose value is "file" and the file will be represented as a dictionary. All the files will be grouped in message in the history. So after uploading two files and asking a question, your history might look like this:

```python

[

{"role": "user", "content": [{"type": "file", "file": {"path": "cat1.png"}},

{"type": "file", "file": {"path": "cat1.png"}},

{"type": "text", "text": "What's the difference between these two images?"}]}

]

```

The return type of your chat function does *not change* when setting `multimodal=True` (i.e. in the simplest case, you should still return a string value). We discuss more complex cases, e.g. returning files [below](returning-complex-responses).

If you are customizing a multimodal chat interface, you should pass in an instance of `gr.MultimodalTextbox` to the `textbox` parameter. You can customize the `MultimodalTextbox` further by passing in the `sources` parameter, which is a list of sources to enable. Here's an example that illustrates how to

|

Multimodal Chat Interface

|

https://gradio.app/guides/creating-a-chatbot-fast

|

Chatbots - Creating A Chatbot Fast Guide

|

ox` to the `textbox` parameter. You can customize the `MultimodalTextbox` further by passing in the `sources` parameter, which is a list of sources to enable. Here's an example that illustrates how to set up and customize and multimodal chat interface:

```python

import gradio as gr

def count_images(message, history):

num_images = len(message["files"])

total_images = 0

for message in history:

for content in message["content"]:

if content["type"] == "file":

total_images += 1

return f"You just uploaded {num_images} images, total uploaded: {total_images+num_images}"

demo = gr.ChatInterface(

fn=count_images,

examples=[

{"text": "No files", "files": []}

],

multimodal=True,

textbox=gr.MultimodalTextbox(file_count="multiple", file_types=["image"], sources=["upload", "microphone"])

)

demo.launch()

```

|

Multimodal Chat Interface

|

https://gradio.app/guides/creating-a-chatbot-fast

|

Chatbots - Creating A Chatbot Fast Guide

|

You may want to add additional inputs to your chat function and expose them to your users through the chat UI. For example, you could add a textbox for a system prompt, or a slider that sets the number of tokens in the chatbot's response. The `gr.ChatInterface` class supports an `additional_inputs` parameter which can be used to add additional input components.

The `additional_inputs` parameters accepts a component or a list of components. You can pass the component instances directly, or use their string shortcuts (e.g. `"textbox"` instead of `gr.Textbox()`). If you pass in component instances, and they have _not_ already been rendered, then the components will appear underneath the chatbot within a `gr.Accordion()`.

Here's a complete example:

$code_chatinterface_system_prompt

If the components you pass into the `additional_inputs` have already been rendered in a parent `gr.Blocks()`, then they will _not_ be re-rendered in the accordion. This provides flexibility in deciding where to lay out the input components. In the example below, we position the `gr.Textbox()` on top of the Chatbot UI, while keeping the slider underneath.

```python

import gradio as gr

import time

def echo(message, history, system_prompt, tokens):

response = f"System prompt: {system_prompt}\n Message: {message}."

for i in range(min(len(response), int(tokens))):

time.sleep(0.05)

yield response[: i+1]

with gr.Blocks() as demo:

system_prompt = gr.Textbox("You are helpful AI.", label="System Prompt")

slider = gr.Slider(10, 100, render=False)

gr.ChatInterface(

echo, additional_inputs=[system_prompt, slider],

)

demo.launch()

```

**Examples with additional inputs**

You can also add example values for your additional inputs. Pass in a list of lists to the `examples` parameter, where each inner list represents one sample, and each inner list should be `1 + len(additional_inputs)` long. The first element in the inner list should be the example v

|

Additional Inputs

|

https://gradio.app/guides/creating-a-chatbot-fast

|

Chatbots - Creating A Chatbot Fast Guide

|

s to the `examples` parameter, where each inner list represents one sample, and each inner list should be `1 + len(additional_inputs)` long. The first element in the inner list should be the example value for the chat message, and each subsequent element should be an example value for one of the additional inputs, in order. When additional inputs are provided, examples are rendered in a table underneath the chat interface.

If you need to create something even more custom, then its best to construct the chatbot UI using the low-level `gr.Blocks()` API. We have [a dedicated guide for that here](/guides/creating-a-custom-chatbot-with-blocks).

|

Additional Inputs

|

https://gradio.app/guides/creating-a-chatbot-fast

|

Chatbots - Creating A Chatbot Fast Guide

|

In the same way that you can accept additional inputs into your chat function, you can also return additional outputs. Simply pass in a list of components to the `additional_outputs` parameter in `gr.ChatInterface` and return additional values for each component from your chat function. Here's an example that extracts code and outputs it into a separate `gr.Code` component:

$code_chatinterface_artifacts

**Note:** unlike the case of additional inputs, the components passed in `additional_outputs` must be already defined in your `gr.Blocks` context -- they are not rendered automatically. If you need to render them after your `gr.ChatInterface`, you can set `render=False` when they are first defined and then `.render()` them in the appropriate section of your `gr.Blocks()` as we do in the example above.

|

Additional Outputs

|

https://gradio.app/guides/creating-a-chatbot-fast

|

Chatbots - Creating A Chatbot Fast Guide

|

We mentioned earlier that in the simplest case, your chat function should return a `str` response, which will be rendered as Markdown in the chatbot. However, you can also return more complex responses as we discuss below:

**Returning files or Gradio components**

Currently, the following Gradio components can be displayed inside the chat interface:

* `gr.Image`

* `gr.Plot`

* `gr.Audio`

* `gr.HTML`

* `gr.Video`

* `gr.Gallery`

* `gr.File`

Simply return one of these components from your function to use it with `gr.ChatInterface`. Here's an example that returns an audio file:

```py

import gradio as gr

def music(message, history):

if message.strip():

return gr.Audio("https://github.com/gradio-app/gradio/raw/main/test/test_files/audio_sample.wav")

else:

return "Please provide the name of an artist"

gr.ChatInterface(

music,

textbox=gr.Textbox(placeholder="Which artist's music do you want to listen to?", scale=7),

).launch()

```

Similarly, you could return image files with `gr.Image`, video files with `gr.Video`, or arbitrary files with the `gr.File` component.

**Returning Multiple Messages**

You can return multiple assistant messages from your chat function simply by returning a `list` of messages, each of which is a valid chat type. This lets you, for example, send a message along with files, as in the following example:

$code_chatinterface_echo_multimodal

**Displaying intermediate thoughts or tool usage**

The `gr.ChatInterface` class supports displaying intermediate thoughts or tool usage direct in the chatbot.

To do this, you will need to return a `gr.ChatMessage` object from your chat function. Here is the schema of the `gr.ChatMessage` data class as well as two internal typed dictionaries:

```py

MessageContent = Union[str, FileDataDict, FileData, Component]

@dataclass

class ChatMessage:

content: Me

|

Returning Complex Responses

|

https://gradio.app/guides/creating-a-chatbot-fast

|

Chatbots - Creating A Chatbot Fast Guide

|

ma of the `gr.ChatMessage` data class as well as two internal typed dictionaries:

```py

MessageContent = Union[str, FileDataDict, FileData, Component]

@dataclass

class ChatMessage:

content: MessageContent | list[MessageContent]

metadata: MetadataDict = None

options: list[OptionDict] = None

class MetadataDict(TypedDict):

title: NotRequired[str]

id: NotRequired[int | str]

parent_id: NotRequired[int | str]

log: NotRequired[str]

duration: NotRequired[float]

status: NotRequired[Literal["pending", "done"]]

class OptionDict(TypedDict):

label: NotRequired[str]

value: str

```

As you can see, the `gr.ChatMessage` dataclass is similar to the openai-style message format, e.g. it has a "content" key that refers to the chat message content. But it also includes a "metadata" key whose value is a dictionary. If this dictionary includes a "title" key, the resulting message is displayed as an intermediate thought with the title being displayed on top of the thought. Here's an example showing the usage:

$code_chatinterface_thoughts

You can even show nested thoughts, which is useful for agent demos in which one tool may call other tools. To display nested thoughts, include "id" and "parent_id" keys in the "metadata" dictionary. Read our [dedicated guide on displaying intermediate thoughts and tool usage](/guides/agents-and-tool-usage) for more realistic examples.

**Providing preset responses**

When returning an assistant message, you may want to provide preset options that a user can choose in response. To do this, again, you will again return a `gr.ChatMessage` instance from your chat function. This time, make sure to set the `options` key specifying the preset responses.

As shown in the schema for `gr.ChatMessage` above, the value corresponding to the `options` key should be a list of dictionaries, each with a `value` (a string that is the value that should be sent to the chat function when this response is clicked) and an opt

|

Returning Complex Responses

|

https://gradio.app/guides/creating-a-chatbot-fast

|

Chatbots - Creating A Chatbot Fast Guide

|

corresponding to the `options` key should be a list of dictionaries, each with a `value` (a string that is the value that should be sent to the chat function when this response is clicked) and an optional `label` (if provided, is the text displayed as the preset response instead of the `value`).

This example illustrates how to use preset responses:

$code_chatinterface_options

|

Returning Complex Responses

|

https://gradio.app/guides/creating-a-chatbot-fast

|

Chatbots - Creating A Chatbot Fast Guide

|

You may wish to modify the value of the chatbot with your own events, other than those prebuilt in the `gr.ChatInterface`. For example, you could create a dropdown that prefills the chat history with certain conversations or add a separate button to clear the conversation history. The `gr.ChatInterface` supports these events, but you need to use the `gr.ChatInterface.chatbot_value` as the input or output component in such events. In this example, we use a `gr.Radio` component to prefill the the chatbot with certain conversations:

$code_chatinterface_prefill

|

Modifying the Chatbot Value Directly

|

https://gradio.app/guides/creating-a-chatbot-fast

|

Chatbots - Creating A Chatbot Fast Guide

|

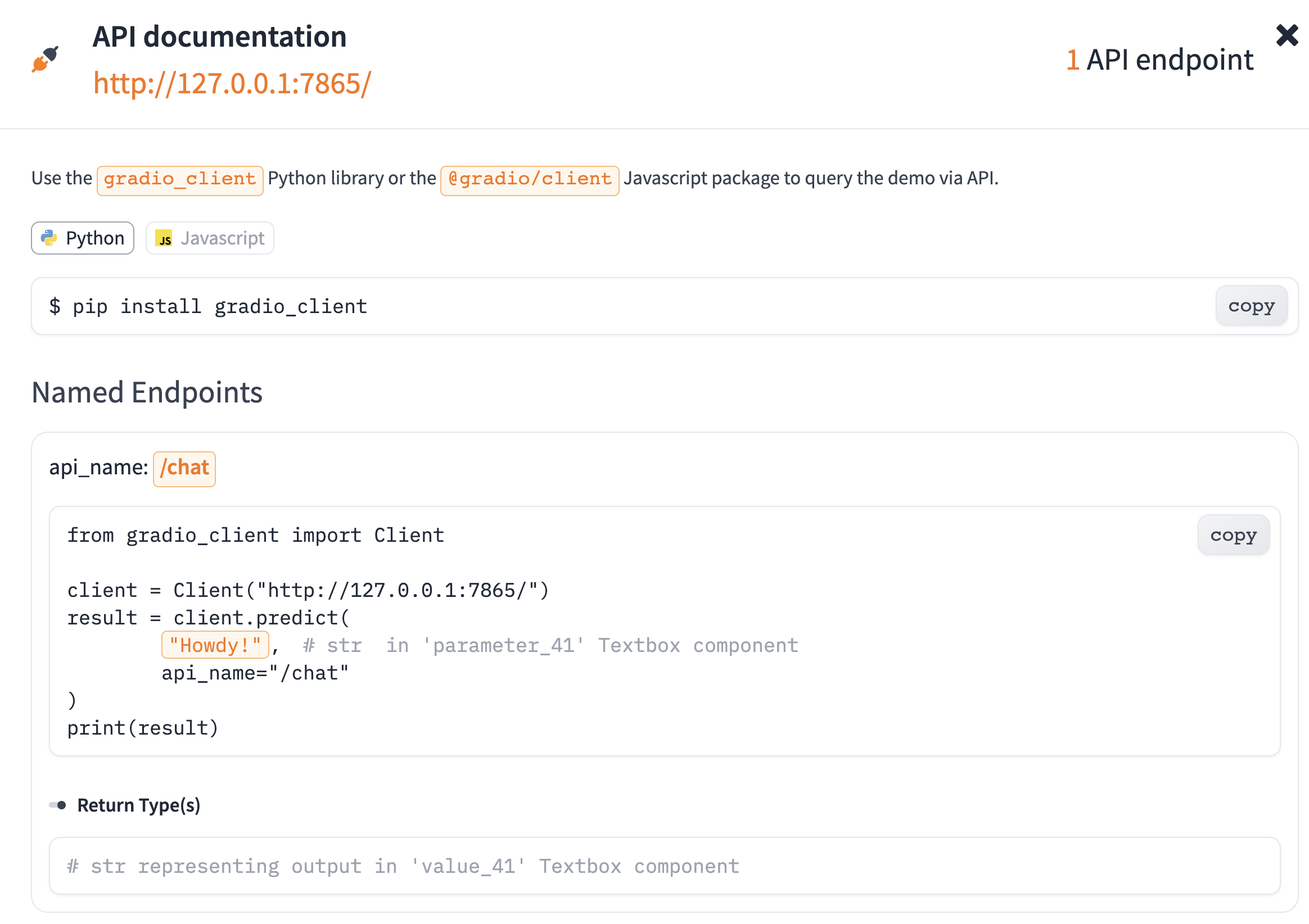

Once you've built your Gradio chat interface and are hosting it on [Hugging Face Spaces](https://hf.space) or somewhere else, then you can query it with a simple API. The API route will be the name of the function you pass to the ChatInterface. So if `gr.ChatInterface(respond)`, then the API route is `/respond`. The endpoint just expects the user's message and will return the response, internally keeping track of the message history.

To use the endpoint, you should use either the [Gradio Python Client](/guides/getting-started-with-the-python-client) or the [Gradio JS client](/guides/getting-started-with-the-js-client). Or, you can deploy your Chat Interface to other platforms, such as a:

* Slack bot [[tutorial]](../guides/creating-a-slack-bot-from-a-gradio-app)

* Website widget [[tutorial]](../guides/creating-a-website-widget-from-a-gradio-chatbot)

|

Using Your Chatbot via API

|

https://gradio.app/guides/creating-a-chatbot-fast

|

Chatbots - Creating A Chatbot Fast Guide

|

You can enable persistent chat history for your ChatInterface, allowing users to maintain multiple conversations and easily switch between them. When enabled, conversations are stored locally and privately in the user's browser using local storage. So if you deploy a ChatInterface e.g. on [Hugging Face Spaces](https://hf.space), each user will have their own separate chat history that won't interfere with other users' conversations. This means multiple users can interact with the same ChatInterface simultaneously while maintaining their own private conversation histories.

To enable this feature, simply set `gr.ChatInterface(save_history=True)` (as shown in the example in the next section). Users will then see their previous conversations in a side panel and can continue any previous chat or start a new one.

|

Chat History

|

https://gradio.app/guides/creating-a-chatbot-fast

|

Chatbots - Creating A Chatbot Fast Guide

|

To gather feedback on your chat model, set `gr.ChatInterface(flagging_mode="manual")` and users will be able to thumbs-up or thumbs-down assistant responses. Each flagged response, along with the entire chat history, will get saved in a CSV file in the app working directory (this can be configured via the `flagging_dir` parameter).

You can also change the feedback options via `flagging_options` parameter. The default options are "Like" and "Dislike", which appear as the thumbs-up and thumbs-down icons. Any other options appear under a dedicated flag icon. This example shows a ChatInterface that has both chat history (mentioned in the previous section) and user feedback enabled:

$code_chatinterface_streaming_echo

Note that in this example, we set several flagging options: "Like", "Spam", "Inappropriate", "Other". Because the case-sensitive string "Like" is one of the flagging options, the user will see a thumbs-up icon next to each assistant message. The three other flagging options will appear in a dropdown under the flag icon.

|

Collecting User Feedback

|

https://gradio.app/guides/creating-a-chatbot-fast

|

Chatbots - Creating A Chatbot Fast Guide

|

Now that you've learned about the `gr.ChatInterface` class and how it can be used to create chatbot UIs quickly, we recommend reading one of the following:

* [Our next Guide](../guides/chatinterface-examples) shows examples of how to use `gr.ChatInterface` with popular LLM libraries.

* If you'd like to build very custom chat applications from scratch, you can build them using the low-level Blocks API, as [discussed in this Guide](../guides/creating-a-custom-chatbot-with-blocks).

* Once you've deployed your Gradio Chat Interface, its easy to use in other applications because of the built-in API. Here's a tutorial on [how to deploy a Gradio chat interface as a Discord bot](../guides/creating-a-discord-bot-from-a-gradio-app).

|

What's Next?

|

https://gradio.app/guides/creating-a-chatbot-fast

|

Chatbots - Creating A Chatbot Fast Guide

|

First, we'll build the UI without handling these events and build from there.

We'll use the Hugging Face InferenceClient in order to get started without setting up

any API keys.

This is what the first draft of our application looks like:

```python

from huggingface_hub import InferenceClient

import gradio as gr

client = InferenceClient()

def respond(

prompt: str,

history,

):

if not history:

history = [{"role": "system", "content": "You are a friendly chatbot"}]

history.append({"role": "user", "content": prompt})

yield history

response = {"role": "assistant", "content": ""}

for message in client.chat_completion( type: ignore

history,

temperature=0.95,

top_p=0.9,

max_tokens=512,

stream=True,

model="openai/gpt-oss-20b"

):

response["content"] += message.choices[0].delta.content or "" if message.choices else ""

yield history + [response]

with gr.Blocks() as demo:

gr.Markdown("Chat with GPT-OSS 20b 🤗")

chatbot = gr.Chatbot(

label="Agent",

avatar_images=(

None,

"https://em-content.zobj.net/source/twitter/376/hugging-face_1f917.png",

),

)

prompt = gr.Textbox(max_lines=1, label="Chat Message")

prompt.submit(respond, [prompt, chatbot], [chatbot])

prompt.submit(lambda: "", None, [prompt])

if __name__ == "__main__":

demo.launch()

```

|

The UI

|

https://gradio.app/guides/chatbot-specific-events

|

Chatbots - Chatbot Specific Events Guide

|

Our undo event will populate the textbox with the previous user message and also remove all subsequent assistant responses.

In order to know the index of the last user message, we can pass `gr.UndoData` to our event handler function like so:

```python

def handle_undo(history, undo_data: gr.UndoData):

return history[:undo_data.index], history[undo_data.index]['content'][0]["text"]

```

We then pass this function to the `undo` event!

```python

chatbot.undo(handle_undo, chatbot, [chatbot, prompt])

```

You'll notice that every bot response will now have an "undo icon" you can use to undo the response -

Tip: You can also access the content of the user message with `undo_data.value`

|

The Undo Event

|

https://gradio.app/guides/chatbot-specific-events

|

Chatbots - Chatbot Specific Events Guide

|

The retry event will work similarly. We'll use `gr.RetryData` to get the index of the previous user message and remove all the subsequent messages from the history. Then we'll use the `respond` function to generate a new response. We could also get the previous prompt via the `value` property of `gr.RetryData`.

```python

def handle_retry(history, retry_data: gr.RetryData):

new_history = history[:retry_data.index]

previous_prompt = history[retry_data.index]['content'][0]["text"]

yield from respond(previous_prompt, new_history)

...

chatbot.retry(handle_retry, chatbot, chatbot)

```

You'll see that the bot messages have a "retry" icon now -

Tip: The Hugging Face inference API caches responses, so in this demo, the retry button will not generate a new response.

|

The Retry Event

|

https://gradio.app/guides/chatbot-specific-events

|

Chatbots - Chatbot Specific Events Guide

|

By now you should hopefully be seeing the pattern!

To let users like a message, we'll add a `.like` event to our chatbot.

We'll pass it a function that accepts a `gr.LikeData` object.

In this case, we'll just print the message that was either liked or disliked.

```python

def handle_like(data: gr.LikeData):

if data.liked:

print("You upvoted this response: ", data.value)

else:

print("You downvoted this response: ", data.value)

chatbot.like(handle_like, None, None)

```

|

The Like Event

|

https://gradio.app/guides/chatbot-specific-events

|

Chatbots - Chatbot Specific Events Guide

|

Same idea with the edit listener! with `gr.Chatbot(editable=True)`, you can capture user edits. The `gr.EditData` object tells us the index of the message edited and the new text of the mssage. Below, we use this object to edit the history, and delete any subsequent messages.

```python

def handle_edit(history, edit_data: gr.EditData):

new_history = history[:edit_data.index]

new_history[-1]['content'] = [{"text": edit_data.value, "type": "text"}]

return new_history

...

chatbot.edit(handle_edit, chatbot, chatbot)

```

|

The Edit Event

|

https://gradio.app/guides/chatbot-specific-events

|

Chatbots - Chatbot Specific Events Guide

|

As a bonus, we'll also cover the `.clear()` event, which is triggered when the user clicks the clear icon to clear all messages. As a developer, you can attach additional events that should happen when this icon is clicked, e.g. to handle clearing of additional chatbot state:

```python

from uuid import uuid4

import gradio as gr

def clear():

print("Cleared uuid")

return uuid4()

def chat_fn(user_input, history, uuid):

return f"{user_input} with uuid {uuid}"

with gr.Blocks() as demo:

uuid_state = gr.State(

uuid4

)

chatbot = gr.Chatbot()

chatbot.clear(clear, outputs=[uuid_state])

gr.ChatInterface(

chat_fn,

additional_inputs=[uuid_state],

chatbot=chatbot,

)

demo.launch()

```

In this example, the `clear` function, bound to the `chatbot.clear` event, returns a new UUID into our session state, when the chat history is cleared via the trash icon. This can be seen in the `chat_fn` function, which references the UUID saved in our session state.

This example also shows that you can use these events with `gr.ChatInterface` by passing in a custom `gr.Chatbot` object.

|

The Clear Event

|

https://gradio.app/guides/chatbot-specific-events

|

Chatbots - Chatbot Specific Events Guide

|

That's it! You now know how you can implement the retry, undo, like, and clear events for the Chatbot.

|

Conclusion

|

https://gradio.app/guides/chatbot-specific-events

|

Chatbots - Chatbot Specific Events Guide

|

3D models are becoming more popular in machine learning and make for some of the most fun demos to experiment with. Using `gradio`, you can easily build a demo of your 3D image model and share it with anyone. The Gradio 3D Model component accepts 3 file types including: _.obj_, _.glb_, & _.gltf_.

This guide will show you how to build a demo for your 3D image model in a few lines of code; like the one below. Play around with 3D object by clicking around, dragging and zooming:

<gradio-app space="gradio/Model3D"> </gradio-app>

Prerequisites

Make sure you have the `gradio` Python package already [installed](https://gradio.app/guides/quickstart).

|

Introduction

|

https://gradio.app/guides/how-to-use-3D-model-component

|

Other Tutorials - How To Use 3D Model Component Guide

|

Let's take a look at how to create the minimal interface above. The prediction function in this case will just return the original 3D model mesh, but you can change this function to run inference on your machine learning model. We'll take a look at more complex examples below.

```python

import gradio as gr

import os

def load_mesh(mesh_file_name):

return mesh_file_name

demo = gr.Interface(

fn=load_mesh,

inputs=gr.Model3D(),

outputs=gr.Model3D(

clear_color=[0.0, 0.0, 0.0, 0.0], label="3D Model"),

examples=[

[os.path.join(os.path.dirname(__file__), "files/Bunny.obj")],

[os.path.join(os.path.dirname(__file__), "files/Duck.glb")],

[os.path.join(os.path.dirname(__file__), "files/Fox.gltf")],

[os.path.join(os.path.dirname(__file__), "files/face.obj")],

],

)

if __name__ == "__main__":

demo.launch()

```

Let's break down the code above:

`load_mesh`: This is our 'prediction' function and for simplicity, this function will take in the 3D model mesh and return it.

Creating the Interface:

- `fn`: the prediction function that is used when the user clicks submit. In our case this is the `load_mesh` function.

- `inputs`: create a model3D input component. The input expects an uploaded file as a {str} filepath.

- `outputs`: create a model3D output component. The output component also expects a file as a {str} filepath.

- `clear_color`: this is the background color of the 3D model canvas. Expects RGBa values.

- `label`: the label that appears on the top left of the component.

- `examples`: list of 3D model files. The 3D model component can accept _.obj_, _.glb_, & _.gltf_ file types.

- `cache_examples`: saves the predicted output for the examples, to save time on inference.

|

Taking a Look at the Code

|

https://gradio.app/guides/how-to-use-3D-model-component

|

Other Tutorials - How To Use 3D Model Component Guide

|

Below is a demo that uses the DPT model to predict the depth of an image and then uses 3D Point Cloud to create a 3D object. Take a look at the [app.py](https://huggingface.co/spaces/gradio/dpt-depth-estimation-3d-obj/blob/main/app.py) file for a peek into the code and the model prediction function.

<gradio-app space="gradio/dpt-depth-estimation-3d-obj"> </gradio-app>

---

And you're done! That's all the code you need to build an interface for your Model3D model. Here are some references that you may find useful:

- Gradio's ["Getting Started" guide](https://gradio.app/getting_started/)

- The first [3D Model Demo](https://huggingface.co/spaces/gradio/Model3D) and [complete code](https://huggingface.co/spaces/gradio/Model3D/tree/main) (on Hugging Face Spaces)

|

Exploring a more complex Model3D Demo:

|

https://gradio.app/guides/how-to-use-3D-model-component

|

Other Tutorials - How To Use 3D Model Component Guide

|

Let's deploy a Gradio-style "Hello, world" app that lets a user input their name and then responds with a short greeting. We're not going to use this code as-is in our app, but it's useful to see what the initial Gradio version looks like.

```python

import gradio as gr

A simple Gradio interface for a greeting function

def greet(name):

return f"Hello {name}!"

demo = gr.Interface(fn=greet, inputs="text", outputs="text")

demo.launch()

```

To deploy this app on Modal you'll need to

- define your container image,

- wrap the Gradio app in a Modal Function,

- and deploy it using Modal's CLI!

Prerequisite: Install and set up Modal

Before you get started, you'll need to create a Modal account if you don't already have one. Then you can set up your environment by authenticating with those account credentials.

- Sign up at [modal.com](https://www.modal.com?utm_source=partner&utm_medium=github&utm_campaign=livekit).

- Install the Modal client in your local development environment.

```bash

pip install modal

```

- Authenticate your account.

```

modal setup

```

Great, now we can start building our app!

Step 1: Define our `modal.Image`

To start, let's make a new file named `gradio_app.py`, import `modal`, and define our image. Modal `Images` are defined by sequentially calling methods on our `Image` instance.

For this simple app, we'll

- start with the `debian_slim` image,

- choose a Python version (3.12),

- and install the dependencies - only `fastapi` and `gradio`.

```python

import modal

app = modal.App("gradio-app")

web_image = modal.Image.debian_slim(python_version="3.12").uv_pip_install(

"fastapi[standard]",

"gradio",

)

```

Note, that you don't need to install `gradio` or `fastapi` in your local environement - only `modal` is required locally.

Step 2: Wrap the Gradio app in a Modal-deployed FastAPI app

Like many Gradio apps, the example above is run by calling `launch()` on our demo at the end of the script. However, Modal doesn't ru

|

Deploying a simple Gradio app on Modal

|

https://gradio.app/guides/deploying-gradio-with-modal

|

Other Tutorials - Deploying Gradio With Modal Guide

|

.

Step 2: Wrap the Gradio app in a Modal-deployed FastAPI app

Like many Gradio apps, the example above is run by calling `launch()` on our demo at the end of the script. However, Modal doesn't run scripts, it runs functions - serverless functions to be exact.

To get Modal to serve our `demo`, we can leverage Gradio and Modal's support for `fastapi` apps. We do this with the `@modal.asgi_app()` function decorator which deploys the web app returned by the function. And we use the `mount_gradio_app` function to add our Gradio `demo` as a route in the web app.

```python

with web_image.imports():

import gradio as gr

from gradio.routes import mount_gradio_app

from fastapi import FastAPI

@app.function(

image=web_image,

max_containers = 1, we'll come to this later

)

@modal.concurrent(max_inputs=100) allow multiple users at one time

@modal.asgi_app()

def ui():

"""A simple Gradio interface for a greeting function."""

def greet(name):

return f"Hello {name}!"

demo = gr.Interface(fn=greet, inputs="text", outputs="text")

return mount_gradio_app(app=FastAPI(), blocks=demo, path="/")

```

Let's quickly review what's going on here:

- We use the `Image.imports` context manager to define our imports. These will be available when your function runs in the cloud.

- We move our code inside a Python function, `ui`, and decorate it with `@app.function` which wraps it as a Modal serverless Function. We provide the image and other parameters (we'll cover this later) as inputs to the decorator.

- We add the `@modal.concurrent` decorator which allows multiple requests per container to be processed at the same time.

- We add the `@modal.asgi_app` decorator which tells Modal that this particular function is serving an ASGI app (here a `fastapi` app). To use this decorator, your ASGI app needs to be the return value from the function.

Step 3: Deploying on Modal

To deploy the app, just run the following command:

```bash

modal deploy <pat

|

Deploying a simple Gradio app on Modal

|

https://gradio.app/guides/deploying-gradio-with-modal

|

Other Tutorials - Deploying Gradio With Modal Guide

|

app). To use this decorator, your ASGI app needs to be the return value from the function.

Step 3: Deploying on Modal

To deploy the app, just run the following command:

```bash

modal deploy <path-to-file>

```

The first time you run your app, Modal will build and cache the image which, takes about 30 seconds. As long as you don't change the image, subsequent deployments will only take a few seconds.

After the image builds Modal will print the URL to your webapp and to your Modal dashboard. The webapp URL should look something like `https://{workspace}-{environment}--gradio-app-ui.modal.run`. Paste it into your web browser a try out your app!

|

Deploying a simple Gradio app on Modal

|

https://gradio.app/guides/deploying-gradio-with-modal

|

Other Tutorials - Deploying Gradio With Modal Guide

|

Sticky Sessions

Modal Functions are serverless which means that each client request is considered independent. While this facilitates autoscaling, it can also mean that extra care should be taken if your application requires any sort of server-side statefulness.

Gradio relies on a REST API, which is itself stateless. But it does require sticky sessions, meaning that every request from a particular client must be routed to the same container. However, Modal does not make any guarantees in this regard.

A simple way to satisfy this constraint is to set `max_containers = 1` in the `@app.function` decorator and setting the `max_inputs` argument of `@modal.concurrent` to a fairly large number - as we did above. This means that Modal won't spin up more than one container to serve requests to your app which effectively satisfies the sticky session requirement.

Concurrency and Queues

Both Gradio and Modal have concepts of concurrency and queues, and getting the most of out of your compute resources requires understanding how these interact.

Modal queues client requests to each deployed Function and simultaneously executes requests up to the concurrency limit for that Function. If requests come in and the concurrency limit is already satisfied, Modal will spin up a new container - up to the maximum set for the Function. In our case, our Gradio app is represented by one Modal Function, so all requests share one queue and concurrency limit. Therefore Modal constrains the _total_ number of requests running at one time, regardless of what they are doing.

Gradio on the other hand, allows developers to utilize multiple queues each with its own concurrency limit. One or more event listeners can then be assigned to a queue which is useful to manage GPU resources for computationally expensive requests.

Thinking carefully about how these queues and limits interact can help you optimize your app's performance and resource optimization while avoiding unwanted results like

|

Important Considerations

|

https://gradio.app/guides/deploying-gradio-with-modal

|

Other Tutorials - Deploying Gradio With Modal Guide

|

tionally expensive requests.

Thinking carefully about how these queues and limits interact can help you optimize your app's performance and resource optimization while avoiding unwanted results like shared or lost state.

Creating a GPU Function

Another option to manage GPU utilization is to deploy your GPU computations in their own Modal Function and calling this remote Function from inside your Gradio app. This allows you to take full advantage of Modal's serverless autoscaling while routing all of the client HTTP requests to a single Gradio CPU container.

|

Important Considerations

|

https://gradio.app/guides/deploying-gradio-with-modal

|

Other Tutorials - Deploying Gradio With Modal Guide

|

In this guide we will demonstrate some of the ways you can use Gradio with Comet. We will cover the basics of using Comet with Gradio and show you some of the ways that you can leverage Gradio's advanced features such as [Embedding with iFrames](https://www.gradio.app/guides/sharing-your-app/embedding-with-iframes) and [State](https://www.gradio.app/docs/state) to build some amazing model evaluation workflows.

Here is a list of the topics covered in this guide.

1. Logging Gradio UI's to your Comet Experiments

2. Embedding Gradio Applications directly into your Comet Projects

3. Embedding Hugging Face Spaces directly into your Comet Projects

4. Logging Model Inferences from your Gradio Application to Comet

|

Introduction

|

https://gradio.app/guides/Gradio-and-Comet

|

Other Tutorials - Gradio And Comet Guide

|

[Comet](https://www.comet.com?utm_source=gradio&utm_medium=referral&utm_campaign=gradio-integration&utm_content=gradio-docs) is an MLOps Platform that is designed to help Data Scientists and Teams build better models faster! Comet provides tooling to Track, Explain, Manage, and Monitor your models in a single place! It works with Jupyter Notebooks and Scripts and most importantly it's 100% free!

|

What is Comet?

|

https://gradio.app/guides/Gradio-and-Comet

|

Other Tutorials - Gradio And Comet Guide

|

First, install the dependencies needed to run these examples

```shell

pip install comet_ml torch torchvision transformers gradio shap requests Pillow

```

Next, you will need to [sign up for a Comet Account](https://www.comet.com/signup?utm_source=gradio&utm_medium=referral&utm_campaign=gradio-integration&utm_content=gradio-docs). Once you have your account set up, [grab your API Key](https://www.comet.com/docs/v2/guides/getting-started/quickstart/get-an-api-key?utm_source=gradio&utm_medium=referral&utm_campaign=gradio-integration&utm_content=gradio-docs) and configure your Comet credentials

If you're running these examples as a script, you can either export your credentials as environment variables

```shell

export COMET_API_KEY="<Your API Key>"

export COMET_WORKSPACE="<Your Workspace Name>"

export COMET_PROJECT_NAME="<Your Project Name>"

```

or set them in a `.comet.config` file in your working directory. You file should be formatted in the following way.

```shell

[comet]

api_key=<Your API Key>

workspace=<Your Workspace Name>

project_name=<Your Project Name>

```

If you are using the provided Colab Notebooks to run these examples, please run the cell with the following snippet before starting the Gradio UI. Running this cell allows you to interactively add your API key to the notebook.

```python

import comet_ml

comet_ml.init()

```

|

Setup

|

https://gradio.app/guides/Gradio-and-Comet

|

Other Tutorials - Gradio And Comet Guide

|

[](https://colab.research.google.com/github/comet-ml/comet-examples/blob/master/integrations/model-evaluation/gradio/notebooks/Gradio_and_Comet.ipynb)

In this example, we will go over how to log your Gradio Applications to Comet and interact with them using the Gradio Custom Panel.

Let's start by building a simple Image Classification example using `resnet18`.

```python

import comet_ml

import requests

import torch

from PIL import Image

from torchvision import transforms

torch.hub.download_url_to_file("https://github.com/pytorch/hub/raw/master/images/dog.jpg", "dog.jpg")

if torch.cuda.is_available():

device = "cuda"

else:

device = "cpu"

model = torch.hub.load("pytorch/vision:v0.6.0", "resnet18", pretrained=True).eval()

model = model.to(device)

Download human-readable labels for ImageNet.

response = requests.get("https://git.io/JJkYN")

labels = response.text.split("\n")

def predict(inp):

inp = Image.fromarray(inp.astype("uint8"), "RGB")

inp = transforms.ToTensor()(inp).unsqueeze(0)

with torch.no_grad():

prediction = torch.nn.functional.softmax(model(inp.to(device))[0], dim=0)

return {labels[i]: float(prediction[i]) for i in range(1000)}

inputs = gr.Image()

outputs = gr.Label(num_top_classes=3)

io = gr.Interface(

fn=predict, inputs=inputs, outputs=outputs, examples=["dog.jpg"]

)

io.launch(inline=False, share=True)

experiment = comet_ml.Experiment()

experiment.add_tag("image-classifier")

io.integrate(comet_ml=experiment)

```

The last line in this snippet will log the URL of the Gradio Application to your Comet Experiment. You can find the URL in the Text Tab of your Experiment.

<video width="560" height="315" controls>

<source src="https://user-images.githubusercontent.com/7529846/214328034-09369d4d-8b94-4c4a-aa3c-25e3ed8394c4.mp4"></source>

</video>

Add the Gradio Panel to your Experiment to interact with your application.

<video width=

|

1. Logging Gradio UI's to your Comet Experiments

|

https://gradio.app/guides/Gradio-and-Comet

|

Other Tutorials - Gradio And Comet Guide

|

r-images.githubusercontent.com/7529846/214328034-09369d4d-8b94-4c4a-aa3c-25e3ed8394c4.mp4"></source>

</video>

Add the Gradio Panel to your Experiment to interact with your application.

<video width="560" height="315" controls>

<source src="https://user-images.githubusercontent.com/7529846/214328194-95987f83-c180-4929-9bed-c8a0d3563ed7.mp4"></source>

</video>

|

1. Logging Gradio UI's to your Comet Experiments

|

https://gradio.app/guides/Gradio-and-Comet

|

Other Tutorials - Gradio And Comet Guide

|

<iframe width="560" height="315" src="https://www.youtube.com/embed/KZnpH7msPq0?start=9" title="YouTube video player" frameborder="0" allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture; web-share" allowfullscreen></iframe>

If you are permanently hosting your Gradio application, you can embed the UI using the Gradio Panel Extended custom Panel.

Go to your Comet Project page, and head over to the Panels tab. Click the `+ Add` button to bring up the Panels search page.

<img width="560" alt="adding-panels" src="https://user-images.githubusercontent.com/7529846/214329314-70a3ff3d-27fb-408c-a4d1-4b58892a3854.jpeg">

Next, search for Gradio Panel Extended in the Public Panels section and click `Add`.

<img width="560" alt="gradio-panel-extended" src="https://user-images.githubusercontent.com/7529846/214325577-43226119-0292-46be-a62a-0c7a80646ebb.png">

Once you have added your Panel, click `Edit` to access to the Panel Options page and paste in the URL of your Gradio application.

<img width="560" alt="Edit-Gradio-Panel-URL" src="https://user-images.githubusercontent.com/7529846/214334843-870fe726-0aa1-4b21-bbc6-0c48f56c48d8.png">

|

2. Embedding Gradio Applications directly into your Comet Projects

|

https://gradio.app/guides/Gradio-and-Comet

|

Other Tutorials - Gradio And Comet Guide

|

<iframe width="560" height="315" src="https://www.youtube.com/embed/KZnpH7msPq0?start=107" title="YouTube video player" frameborder="0" allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture; web-share" allowfullscreen></iframe>

You can also embed Gradio Applications that are hosted on Hugging Faces Spaces into your Comet Projects using the Hugging Face Spaces Panel.

Go to your Comet Project page, and head over to the Panels tab. Click the `+ Add` button to bring up the Panels search page. Next, search for the Hugging Face Spaces Panel in the Public Panels section and click `Add`.

<img width="560" height="315" alt="huggingface-spaces-panel" src="https://user-images.githubusercontent.com/7529846/214325606-99aa3af3-b284-4026-b423-d3d238797e12.png">

Once you have added your Panel, click Edit to access to the Panel Options page and paste in the path of your Hugging Face Space e.g. `pytorch/ResNet`

<img width="560" height="315" alt="Edit-HF-Space" src="https://user-images.githubusercontent.com/7529846/214335868-c6f25dee-13db-4388-bcf5-65194f850b02.png">

|

3. Embedding Hugging Face Spaces directly into your Comet Projects

|

https://gradio.app/guides/Gradio-and-Comet

|

Other Tutorials - Gradio And Comet Guide

|

<iframe width="560" height="315" src="https://www.youtube.com/embed/KZnpH7msPq0?start=176" title="YouTube video player" frameborder="0" allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture; web-share" allowfullscreen></iframe>

[](https://colab.research.google.com/github/comet-ml/comet-examples/blob/master/integrations/model-evaluation/gradio/notebooks/Logging_Model_Inferences_with_Comet_and_Gradio.ipynb)

In the previous examples, we demonstrated the various ways in which you can interact with a Gradio application through the Comet UI. Additionally, you can also log model inferences, such as SHAP plots, from your Gradio application to Comet.

In the following snippet, we're going to log inferences from a Text Generation model. We can persist an Experiment across multiple inference calls using Gradio's [State](https://www.gradio.app/docs/state) object. This will allow you to log multiple inferences from a model to a single Experiment.

```python

import comet_ml

import gradio as gr

import shap

import torch

from transformers import AutoModelForCausalLM, AutoTokenizer

if torch.cuda.is_available():

device = "cuda"

else:

device = "cpu"

MODEL_NAME = "gpt2"

model = AutoModelForCausalLM.from_pretrained(MODEL_NAME)

set model decoder to true

model.config.is_decoder = True

set text-generation params under task_specific_params

model.config.task_specific_params["text-generation"] = {

"do_sample": True,

"max_length": 50,

"temperature": 0.7,

"top_k": 50,

"no_repeat_ngram_size": 2,

}

model = model.to(device)

tokenizer = AutoTokenizer.from_pretrained(MODEL_NAME)

explainer = shap.Explainer(model, tokenizer)

def start_experiment():

"""Returns an APIExperiment object that is thread safe

and can be used to log inferences to a single Experiment

"""

try:

api = comet_ml.API()

workspace = api.get_default_

|

4. Logging Model Inferences to Comet

|

https://gradio.app/guides/Gradio-and-Comet

|

Other Tutorials - Gradio And Comet Guide

|

"""Returns an APIExperiment object that is thread safe

and can be used to log inferences to a single Experiment

"""

try:

api = comet_ml.API()

workspace = api.get_default_workspace()

project_name = comet_ml.config.get_config()["comet.project_name"]

experiment = comet_ml.APIExperiment(

workspace=workspace, project_name=project_name

)

experiment.log_other("Created from", "gradio-inference")

message = f"Started Experiment: [{experiment.name}]({experiment.url})"

return (experiment, message)

except Exception as e:

return None, None

def predict(text, state, message):

experiment = state

shap_values = explainer([text])

plot = shap.plots.text(shap_values, display=False)

if experiment is not None:

experiment.log_other("message", message)

experiment.log_html(plot)

return plot

with gr.Blocks() as demo:

start_experiment_btn = gr.Button("Start New Experiment")

experiment_status = gr.Markdown()

Log a message to the Experiment to provide more context

experiment_message = gr.Textbox(label="Experiment Message")

experiment = gr.State()

input_text = gr.Textbox(label="Input Text", lines=5, interactive=True)

submit_btn = gr.Button("Submit")

output = gr.HTML(interactive=True)

start_experiment_btn.click(

start_experiment, outputs=[experiment, experiment_status]

)

submit_btn.click(

predict, inputs=[input_text, experiment, experiment_message], outputs=[output]

)

```

Inferences from this snippet will be saved in the HTML tab of your experiment.

<video width="560" height="315" controls>

<source src="https://user-images.githubusercontent.com/7529846/214328610-466e5c81-4814-49b9-887c-065aca14dd30.mp4"></source>

</video>

|

4. Logging Model Inferences to Comet

|

https://gradio.app/guides/Gradio-and-Comet

|

Other Tutorials - Gradio And Comet Guide

|

887c-065aca14dd30.mp4"></source>

</video>

|

4. Logging Model Inferences to Comet

|

https://gradio.app/guides/Gradio-and-Comet

|

Other Tutorials - Gradio And Comet Guide

|

We hope you found this guide useful and that it provides some inspiration to help you build awesome model evaluation workflows with Comet and Gradio.

|

Conclusion

|

https://gradio.app/guides/Gradio-and-Comet

|

Other Tutorials - Gradio And Comet Guide

|

- Create an account on Hugging Face [here](https://huggingface.co/join).

- Add Gradio Demo under your username, see this [course](https://huggingface.co/course/chapter9/4?fw=pt) for setting up Gradio Demo on Hugging Face.

- Request to join the Comet organization [here](https://huggingface.co/Comet).

|

How to contribute Gradio demos on HF spaces on the Comet organization

|

https://gradio.app/guides/Gradio-and-Comet

|

Other Tutorials - Gradio And Comet Guide

|

- [Comet Documentation](https://www.comet.com/docs/v2/?utm_source=gradio&utm_medium=referral&utm_campaign=gradio-integration&utm_content=gradio-docs)

|

Additional Resources

|

https://gradio.app/guides/Gradio-and-Comet

|

Other Tutorials - Gradio And Comet Guide

|

Image classification is a central task in computer vision. Building better classifiers to classify what object is present in a picture is an active area of research, as it has applications stretching from facial recognition to manufacturing quality control.

State-of-the-art image classifiers are based on the _transformers_ architectures, originally popularized for NLP tasks. Such architectures are typically called vision transformers (ViT). Such models are perfect to use with Gradio's _image_ input component, so in this tutorial we will build a web demo to classify images using Gradio. We will be able to build the whole web application in a **single line of Python**, and it will look like the demo on the bottom of the page.

Let's get started!

Prerequisites

Make sure you have the `gradio` Python package already [installed](/getting_started).

|

Introduction

|

https://gradio.app/guides/image-classification-with-vision-transformers

|

Other Tutorials - Image Classification With Vision Transformers Guide

|

First, we will need an image classification model. For this tutorial, we will use a model from the [Hugging Face Model Hub](https://huggingface.co/models?pipeline_tag=image-classification). The Hub contains thousands of models covering dozens of different machine learning tasks.

Expand the Tasks category on the left sidebar and select "Image Classification" as our task of interest. You will then see all of the models on the Hub that are designed to classify images.

At the time of writing, the most popular one is `google/vit-base-patch16-224`, which has been trained on ImageNet images at a resolution of 224x224 pixels. We will use this model for our demo.

|

Step 1 — Choosing a Vision Image Classification Model

|

https://gradio.app/guides/image-classification-with-vision-transformers

|

Other Tutorials - Image Classification With Vision Transformers Guide

|

When using a model from the Hugging Face Hub, we do not need to define the input or output components for the demo. Similarly, we do not need to be concerned with the details of preprocessing or postprocessing.

All of these are automatically inferred from the model tags.

Besides the import statement, it only takes a single line of Python to load and launch the demo.

We use the `gr.Interface.load()` method and pass in the path to the model including the `huggingface/` to designate that it is from the Hugging Face Hub.

```python

import gradio as gr

gr.Interface.load(

"huggingface/google/vit-base-patch16-224",

examples=["alligator.jpg", "laptop.jpg"]).launch()

```

Notice that we have added one more parameter, the `examples`, which allows us to prepopulate our interfaces with a few predefined examples.

This produces the following interface, which you can try right here in your browser. When you input an image, it is automatically preprocessed and sent to the Hugging Face Hub API, where it is passed through the model and returned as a human-interpretable prediction. Try uploading your own image!

<gradio-app space="gradio/vision-transformer">

---

And you're done! In one line of code, you have built a web demo for an image classifier. If you'd like to share with others, try setting `share=True` when you `launch()` the Interface!

|

Step 2 — Loading the Vision Transformer Model with Gradio

|

https://gradio.app/guides/image-classification-with-vision-transformers

|

Other Tutorials - Image Classification With Vision Transformers Guide

|

In this Guide, we'll walk you through:

- Introduction of Gradio, and Hugging Face Spaces, and Wandb

- How to setup a Gradio demo using the Wandb integration for JoJoGAN

- How to contribute your own Gradio demos after tracking your experiments on wandb to the Wandb organization on Hugging Face

|

Introduction

|

https://gradio.app/guides/Gradio-and-Wandb-Integration

|

Other Tutorials - Gradio And Wandb Integration Guide

|

Weights and Biases (W&B) allows data scientists and machine learning scientists to track their machine learning experiments at every stage, from training to production. Any metric can be aggregated over samples and shown in panels in a customizable and searchable dashboard, like below:

<img alt="Screen Shot 2022-08-01 at 5 54 59 PM" src="https://user-images.githubusercontent.com/81195143/182252755-4a0e1ca8-fd25-40ff-8c91-c9da38aaa9ec.png">

|

What is Wandb?

|

https://gradio.app/guides/Gradio-and-Wandb-Integration

|

Other Tutorials - Gradio And Wandb Integration Guide

|

Gradio

Gradio lets users demo their machine learning models as a web app, all in a few lines of Python. Gradio wraps any Python function (such as a machine learning model's inference function) into a user interface and the demos can be launched inside jupyter notebooks, colab notebooks, as well as embedded in your own website and hosted on Hugging Face Spaces for free.

Get started [here](https://gradio.app/getting_started)

Hugging Face Spaces

Hugging Face Spaces is a free hosting option for Gradio demos. Spaces comes with 3 SDK options: Gradio, Streamlit and Static HTML demos. Spaces can be public or private and the workflow is similar to github repos. There are over 2000+ spaces currently on Hugging Face. Learn more about spaces [here](https://huggingface.co/spaces/launch).

|

What are Hugging Face Spaces & Gradio?

|

https://gradio.app/guides/Gradio-and-Wandb-Integration

|

Other Tutorials - Gradio And Wandb Integration Guide

|

Now, let's walk you through how to do this on your own. We'll make the assumption that you're new to W&B and Gradio for the purposes of this tutorial.

Let's get started!

1. Create a W&B account

Follow [these quick instructions](https://app.wandb.ai/login) to create your free account if you don’t have one already. It shouldn't take more than a couple minutes. Once you're done (or if you've already got an account), next, we'll run a quick colab.

2. Open Colab Install Gradio and W&B

We'll be following along with the colab provided in the JoJoGAN repo with some minor modifications to use Wandb and Gradio more effectively.

[](https://colab.research.google.com/github/mchong6/JoJoGAN/blob/main/stylize.ipynb)

Install Gradio and Wandb at the top:

```sh

pip install gradio wandb

```

3. Finetune StyleGAN and W&B experiment tracking

This next step will open a W&B dashboard to track your experiments and a gradio panel showing pretrained models to choose from a drop down menu from a Gradio Demo hosted on Huggingface Spaces. Here's the code you need for that:

```python

alpha = 1.0

alpha = 1-alpha

preserve_color = True

num_iter = 100

log_interval = 50

samples = []

column_names = ["Reference (y)", "Style Code(w)", "Real Face Image(x)"]

wandb.init(project="JoJoGAN")

config = wandb.config

config.num_iter = num_iter

config.preserve_color = preserve_color

wandb.log(

{"Style reference": [wandb.Image(transforms.ToPILImage()(target_im))]},

step=0)

load discriminator for perceptual loss

discriminator = Discriminator(1024, 2).eval().to(device)

ckpt = torch.load('models/stylegan2-ffhq-config-f.pt', map_location=lambda storage, loc: storage)

discriminator.load_state_dict(ckpt["d"], strict=False)

reset generator

del generator

generator = deepcopy(original_generator)

g_optim = optim.Adam(generator.parameters(),

|

Setting up a Gradio Demo for JoJoGAN

|

https://gradio.app/guides/Gradio-and-Wandb-Integration

|

Other Tutorials - Gradio And Wandb Integration Guide

|

: storage)

discriminator.load_state_dict(ckpt["d"], strict=False)

reset generator

del generator

generator = deepcopy(original_generator)

g_optim = optim.Adam(generator.parameters(), lr=2e-3, betas=(0, 0.99))

Which layers to swap for generating a family of plausible real images -> fake image

if preserve_color:

id_swap = [9,11,15,16,17]

else:

id_swap = list(range(7, generator.n_latent))

for idx in tqdm(range(num_iter)):

mean_w = generator.get_latent(torch.randn([latents.size(0), latent_dim]).to(device)).unsqueeze(1).repeat(1, generator.n_latent, 1)

in_latent = latents.clone()

in_latent[:, id_swap] = alpha*latents[:, id_swap] + (1-alpha)*mean_w[:, id_swap]

img = generator(in_latent, input_is_latent=True)

with torch.no_grad():

real_feat = discriminator(targets)

fake_feat = discriminator(img)

loss = sum([F.l1_loss(a, b) for a, b in zip(fake_feat, real_feat)])/len(fake_feat)

wandb.log({"loss": loss}, step=idx)

if idx % log_interval == 0:

generator.eval()

my_sample = generator(my_w, input_is_latent=True)

generator.train()

my_sample = transforms.ToPILImage()(utils.make_grid(my_sample, normalize=True, range=(-1, 1)))

wandb.log(

{"Current stylization": [wandb.Image(my_sample)]},

step=idx)

table_data = [

wandb.Image(transforms.ToPILImage()(target_im)),

wandb.Image(img),

wandb.Image(my_sample),

]

samples.append(table_data)

g_optim.zero_grad()

loss.backward()

g_optim.step()

out_table = wandb.Table(data=samples, columns=column_names)

wandb.log({"Current Samples": out_table})

```

4. Save, Download, and Load Model

Here's how to save and download your model.

```python

from PIL import Image

import torch

torch.backends.cudnn.benchmark = True

from torchvision impor

|

Setting up a Gradio Demo for JoJoGAN

|

https://gradio.app/guides/Gradio-and-Wandb-Integration

|

Other Tutorials - Gradio And Wandb Integration Guide

|

ave, Download, and Load Model

Here's how to save and download your model.

```python

from PIL import Image

import torch

torch.backends.cudnn.benchmark = True

from torchvision import transforms, utils

from util import *

import math

import random

import numpy as np

from torch import nn, autograd, optim

from torch.nn import functional as F

from tqdm import tqdm

import lpips

from model import *

from e4e_projection import projection as e4e_projection

from copy import deepcopy

import imageio

import os

import sys

import torchvision.transforms as transforms

from argparse import Namespace

from e4e.models.psp import pSp

from util import *

from huggingface_hub import hf_hub_download

from google.colab import files

torch.save({"g": generator.state_dict()}, "your-model-name.pt")

files.download('your-model-name.pt')

latent_dim = 512

device="cuda"

model_path_s = hf_hub_download(repo_id="akhaliq/jojogan-stylegan2-ffhq-config-f", filename="stylegan2-ffhq-config-f.pt")

original_generator = Generator(1024, latent_dim, 8, 2).to(device)

ckpt = torch.load(model_path_s, map_location=lambda storage, loc: storage)

original_generator.load_state_dict(ckpt["g_ema"], strict=False)

mean_latent = original_generator.mean_latent(10000)

generator = deepcopy(original_generator)

ckpt = torch.load("/content/JoJoGAN/your-model-name.pt", map_location=lambda storage, loc: storage)

generator.load_state_dict(ckpt["g"], strict=False)

generator.eval()

plt.rcParams['figure.dpi'] = 150

transform = transforms.Compose(

[

transforms.Resize((1024, 1024)),

transforms.ToTensor(),

transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)),

]

)

def inference(img):

img.save('out.jpg')

aligned_face = align_face('out.jpg')

my_w = e4e_projection(aligned_face, "out.pt", device).unsqueeze(0)

|

Setting up a Gradio Demo for JoJoGAN

|

https://gradio.app/guides/Gradio-and-Wandb-Integration

|

Other Tutorials - Gradio And Wandb Integration Guide

|

.5, 0.5)),

]

)

def inference(img):

img.save('out.jpg')

aligned_face = align_face('out.jpg')

my_w = e4e_projection(aligned_face, "out.pt", device).unsqueeze(0)

with torch.no_grad():

my_sample = generator(my_w, input_is_latent=True)

npimage = my_sample[0].cpu().permute(1, 2, 0).detach().numpy()

imageio.imwrite('filename.jpeg', npimage)

return 'filename.jpeg'

````

5. Build a Gradio Demo

```python

import gradio as gr

title = "JoJoGAN"

description = "Gradio Demo for JoJoGAN: One Shot Face Stylization. To use it, simply upload your image, or click one of the examples to load them. Read more at the links below."

demo = gr.Interface(

inference,

gr.Image(type="pil"),

gr.Image(type="file"),

title=title,

description=description

)

demo.launch(share=True)

```

6. Integrate Gradio into your W&B Dashboard

The last step—integrating your Gradio demo with your W&B dashboard—is just one extra line:

```python

demo.integrate(wandb=wandb)

```

Once you call integrate, a demo will be created and you can integrate it into your dashboard or report.

Outside of W&B with Web components, using the `gradio-app` tags, anyone can embed Gradio demos on HF spaces directly into their blogs, websites, documentation, etc.:

```html

<gradio-app space="akhaliq/JoJoGAN"> </gradio-app>

```

7. (Optional) Embed W&B plots in your Gradio App

It's also possible to embed W&B plots within Gradio apps. To do so, you can create a W&B Report of your plots and

embed them within your Gradio app within a `gr.HTML` block.

The Report will need to be public and you will need to wrap the URL within an iFrame like this:

```python

import gradio as gr

def wandb_report(url):

iframe = f'<iframe src={url} style="border:none;height:1024px;width:100%">'

return gr.HTML(iframe)

with gr.Blocks() a

|

Setting up a Gradio Demo for JoJoGAN

|

https://gradio.app/guides/Gradio-and-Wandb-Integration

|

Other Tutorials - Gradio And Wandb Integration Guide

|

``python

import gradio as gr

def wandb_report(url):

iframe = f'<iframe src={url} style="border:none;height:1024px;width:100%">'

return gr.HTML(iframe)

with gr.Blocks() as demo:

report_url = 'https://wandb.ai/_scott/pytorch-sweeps-demo/reports/loss-22-10-07-16-00-17---VmlldzoyNzU2NzAx'

report = wandb_report(report_url)

demo.launch(share=True)

```

|

Setting up a Gradio Demo for JoJoGAN

|

https://gradio.app/guides/Gradio-and-Wandb-Integration

|

Other Tutorials - Gradio And Wandb Integration Guide

|

We hope you enjoyed this brief demo of embedding a Gradio demo to a W&B report! Thanks for making it to the end. To recap:

- Only one single reference image is needed for fine-tuning JoJoGAN which usually takes about 1 minute on a GPU in colab. After training, style can be applied to any input image. Read more in the paper.

- W&B tracks experiments with just a few lines of code added to a colab and you can visualize, sort, and understand your experiments in a single, centralized dashboard.

- Gradio, meanwhile, demos the model in a user friendly interface to share anywhere on the web.

|

Conclusion

|

https://gradio.app/guides/Gradio-and-Wandb-Integration

|

Other Tutorials - Gradio And Wandb Integration Guide

|

- Create an account on Hugging Face [here](https://huggingface.co/join).

- Add Gradio Demo under your username, see this [course](https://huggingface.co/course/chapter9/4?fw=pt) for setting up Gradio Demo on Hugging Face.

- Request to join wandb organization [here](https://huggingface.co/wandb).

- Once approved transfer model from your username to Wandb organization

|

How to contribute Gradio demos on HF spaces on the Wandb organization

|

https://gradio.app/guides/Gradio-and-Wandb-Integration

|

Other Tutorials - Gradio And Wandb Integration Guide

|

The Hugging Face Hub is a central platform that has hundreds of thousands of [models](https://huggingface.co/models), [datasets](https://huggingface.co/datasets) and [demos](https://huggingface.co/spaces) (also known as Spaces).

Gradio has multiple features that make it extremely easy to leverage existing models and Spaces on the Hub. This guide walks through these features.

|

Introduction

|

https://gradio.app/guides/using-hugging-face-integrations

|

Other Tutorials - Using Hugging Face Integrations Guide

|

Hugging Face has a service called [Serverless Inference Endpoints](https://huggingface.co/docs/api-inference/index), which allows you to send HTTP requests to models on the Hub. The API includes a generous free tier, and you can switch to [dedicated Inference Endpoints](https://huggingface.co/inference-endpoints/dedicated) when you want to use it in production. Gradio integrates directly with Serverless Inference Endpoints so that you can create a demo simply by specifying a model's name (e.g. `Helsinki-NLP/opus-mt-en-es`), like this:

```python

import gradio as gr

demo = gr.load("Helsinki-NLP/opus-mt-en-es", src="models")

demo.launch()

```

For any Hugging Face model supported in Inference Endpoints, Gradio automatically infers the expected input and output and make the underlying server calls, so you don't have to worry about defining the prediction function.

Notice that we just put specify the model name and state that the `src` should be `models` (Hugging Face's Model Hub). There is no need to install any dependencies (except `gradio`) since you are not loading the model on your computer.

You might notice that the first inference takes a little bit longer. This happens since the Inference Endpoints is loading the model in the server. You get some benefits afterward:

- The inference will be much faster.

- The server caches your requests.

- You get built-in automatic scaling.

|

Demos with the Hugging Face Inference Endpoints

|

https://gradio.app/guides/using-hugging-face-integrations

|

Other Tutorials - Using Hugging Face Integrations Guide

|